FreeBSD DVS: FreeBSD 2014 Flame Graphs

Video: https://www.youtube.com/watch?v=fMV1upo88ZwTalk for the FreeBSD 2014 Dev and Vendor summit on flame graphs, by Brendan Gregg, Netflix.

| next prev 1/66 | |

| next prev 2/66 | |

| next prev 3/66 | |

| next prev 4/66 | |

| next prev 5/66 | |

| next prev 6/66 | |

| next prev 7/66 | |

| next prev 8/66 | |

| next prev 9/66 | |

| next prev 10/66 | |

| next prev 11/66 | |

| next prev 12/66 | |

| next prev 13/66 | |

| next prev 14/66 | |

| next prev 15/66 | |

| next prev 16/66 | |

| next prev 17/66 | |

| next prev 18/66 | |

| next prev 19/66 | |

| next prev 20/66 | |

| next prev 21/66 | |

| next prev 22/66 | |

| next prev 23/66 | |

| next prev 24/66 | |

| next prev 25/66 | |

| next prev 26/66 | |

| next prev 27/66 | |

| next prev 28/66 | |

| next prev 29/66 | |

| next prev 30/66 | |

| next prev 31/66 | |

| next prev 32/66 | |

| next prev 33/66 | |

| next prev 34/66 | |

| next prev 35/66 | |

| next prev 36/66 | |

| next prev 37/66 | |

| next prev 38/66 | |

| next prev 39/66 | |

| next prev 40/66 | |

| next prev 41/66 | |

| next prev 42/66 | |

| next prev 43/66 | |

| next prev 44/66 | |

| next prev 45/66 | |

| next prev 46/66 | |

| next prev 47/66 | |

| next prev 48/66 | |

| next prev 49/66 | |

| next prev 50/66 | |

| next prev 51/66 | |

| next prev 52/66 | |

| next prev 53/66 | |

| next prev 54/66 | |

| next prev 55/66 | |

| next prev 56/66 | |

| next prev 57/66 | |

| next prev 58/66 | |

| next prev 59/66 | |

| next prev 60/66 | |

| next prev 61/66 | |

| next prev 62/66 | |

| next prev 63/66 | |

| next prev 64/66 | |

| next prev 65/66 | |

| next prev 66/66 |

PDF: FreeBSD2014_FlameGraphs.pdf

Keywords (from pdftotext):

slide 1:

FreeBSD Developer and Vendor Summit, Nov, 2014 Flame Graphs on FreeBSD Brendan Gregg Senior Performance Architect Performance Engineering Team bgregg@ne5lix.com @brendangreggslide 2:

slide 3:

Agenda 1. Genesis 2. Genera=on 3. CPU 4. Memory 5. Disk I/O 6. Off-‐CPU 7. Chainslide 4:

1. Genesisslide 5:

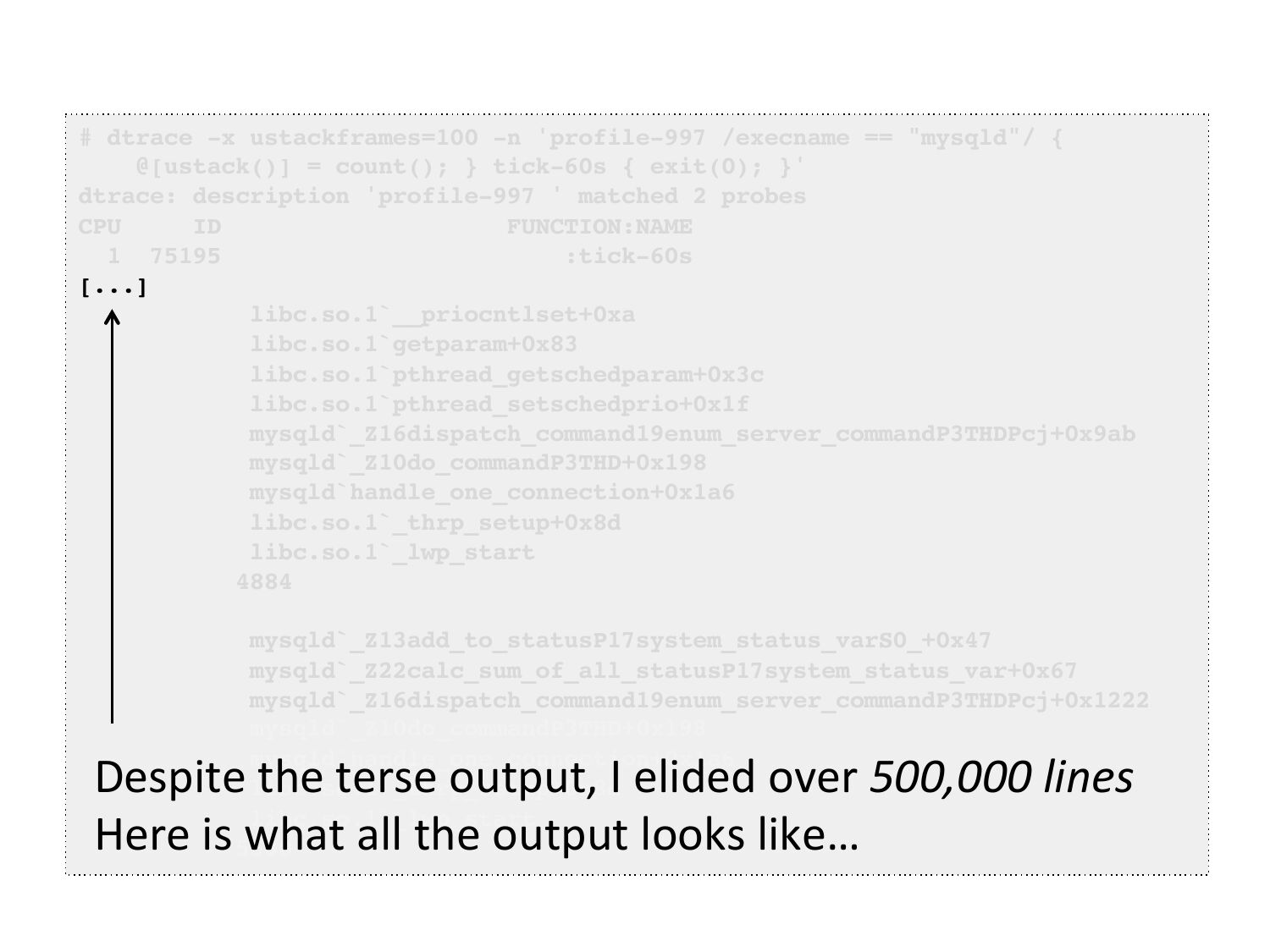

The Problem • The same MySQL load on one host runs at 30% higher CPU than another. Why? • CPU profiling should answer this easilyslide 6:

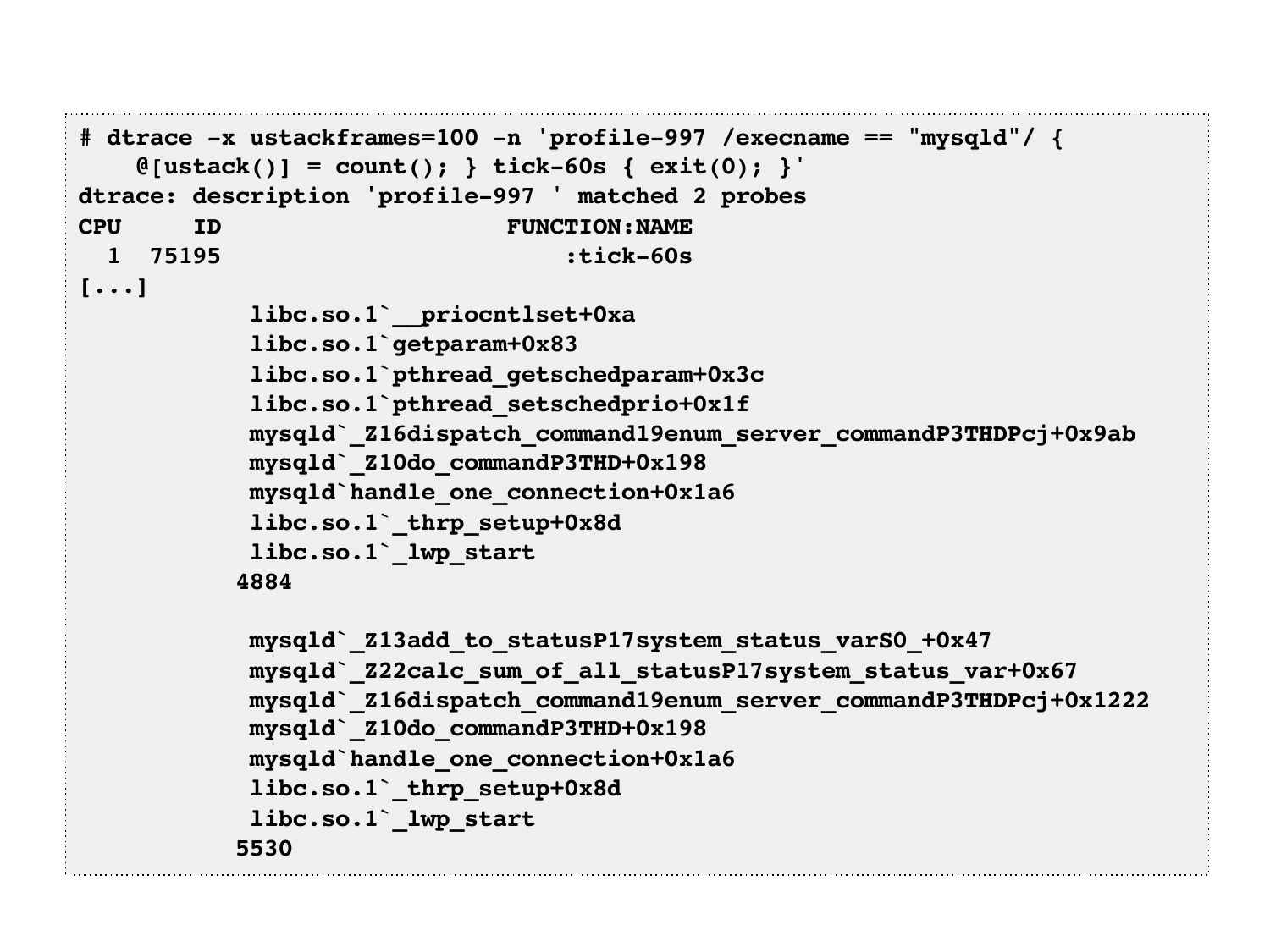

# dtrace -x ustackframes=100 -n 'profile-997 /execname == "mysqld"/ {!

@[ustack()] = count(); } tick-60s { exit(0); }'!

dtrace: description 'profile-997 ' matched 2 probes!

CPU

FUNCTION:NAME!

1 75195

:tick-60s!

[...]!

libc.so.1`__priocntlset+0xa!

libc.so.1`getparam+0x83!

libc.so.1`pthread_getschedparam+0x3c!

libc.so.1`pthread_setschedprio+0x1f!

mysqld`_Z16dispatch_command19enum_server_commandP3THDPcj+0x9ab!

mysqld`_Z10do_commandP3THD+0x198!

mysqld`handle_one_connection+0x1a6!

libc.so.1`_thrp_setup+0x8d!

libc.so.1`_lwp_start!

4884!

mysqld`_Z13add_to_statusP17system_status_varS0_+0x47!

mysqld`_Z22calc_sum_of_all_statusP17system_status_var+0x67!

mysqld`_Z16dispatch_command19enum_server_commandP3THDPcj+0x1222!

mysqld`_Z10do_commandP3THD+0x198!

mysqld`handle_one_connection+0x1a6!

libc.so.1`_thrp_setup+0x8d!

libc.so.1`_lwp_start!

5530!

slide 7:

# dtrace -x ustackframes=100 -n 'profile-997 /execname == "mysqld"/ {!

@[ustack()] = count(); } tick-60s { exit(0); }'!

dtrace: description 'profile-997 ' matched 2 probes!

CPU

FUNCTION:NAME!

1 75195

:tick-60s!

[...]!

libc.so.1`__priocntlset+0xa!

libc.so.1`getparam+0x83!

libc.so.1`pthread_getschedparam+0x3c!

libc.so.1`pthread_setschedprio+0x1f!

mysqld`_Z16dispatch_command19enum_server_commandP3THDPcj+0x9ab!

mysqld`_Z10do_commandP3THD+0x198!

mysqld`handle_one_connection+0x1a6!

libc.so.1`_thrp_setup+0x8d!

libc.so.1`_lwp_start!

4884!

mysqld`_Z13add_to_statusP17system_status_varS0_+0x47!

mysqld`_Z22calc_sum_of_all_statusP17system_status_var+0x67!

mysqld`_Z16dispatch_command19enum_server_commandP3THDPcj+0x1222!

mysqld`_Z10do_commandP3THD+0x198!

mysqld`handle_one_connection+0x1a6!

libc.so.1`_thrp_setup+0x8d!

libc.so.1`_lwp_start!

5530!

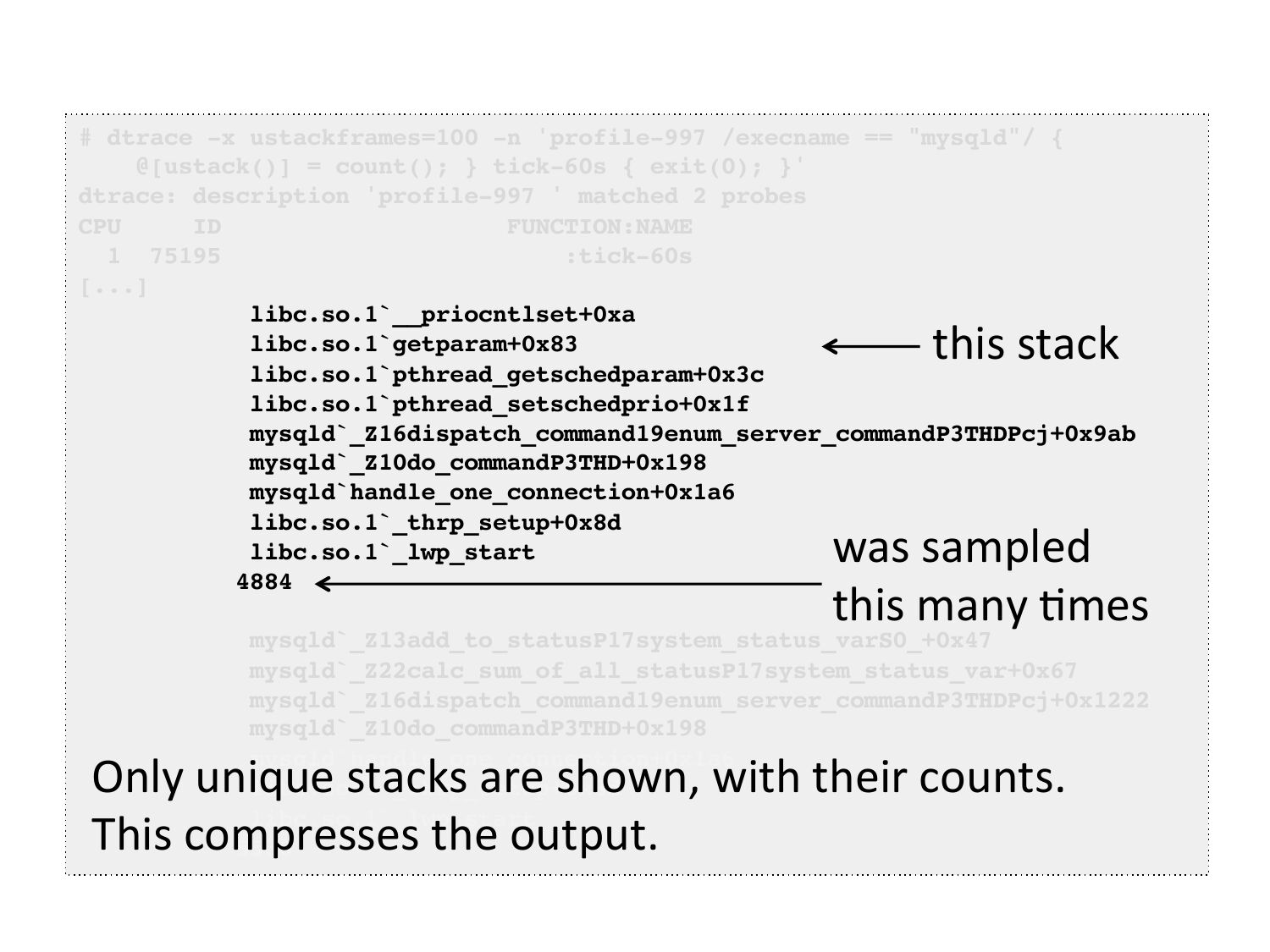

this

stack

was

sampled

this

many

=mes

Only

unique

stacks

are

shown,

with

their

counts.

This

compresses

the

output.

slide 8:

# dtrace -x ustackframes=100 -n 'profile-997 /execname == "mysqld"/ {!

@[ustack()] = count(); } tick-60s { exit(0); }'!

dtrace: description 'profile-997 ' matched 2 probes!

CPU

FUNCTION:NAME!

1 75195

:tick-60s!

[...]!

libc.so.1`__priocntlset+0xa!

libc.so.1`getparam+0x83!

libc.so.1`pthread_getschedparam+0x3c!

libc.so.1`pthread_setschedprio+0x1f!

mysqld`_Z16dispatch_command19enum_server_commandP3THDPcj+0x9ab!

mysqld`_Z10do_commandP3THD+0x198!

mysqld`handle_one_connection+0x1a6!

libc.so.1`_thrp_setup+0x8d!

libc.so.1`_lwp_start!

4884!

mysqld`_Z13add_to_statusP17system_status_varS0_+0x47!

mysqld`_Z22calc_sum_of_all_statusP17system_status_var+0x67!

mysqld`_Z16dispatch_command19enum_server_commandP3THDPcj+0x1222!

mysqld`_Z10do_commandP3THD+0x198!

mysqld`handle_one_connection+0x1a6!

libc.so.1`_thrp_setup+0x8d!

libc.so.1`_lwp_start!

5530!

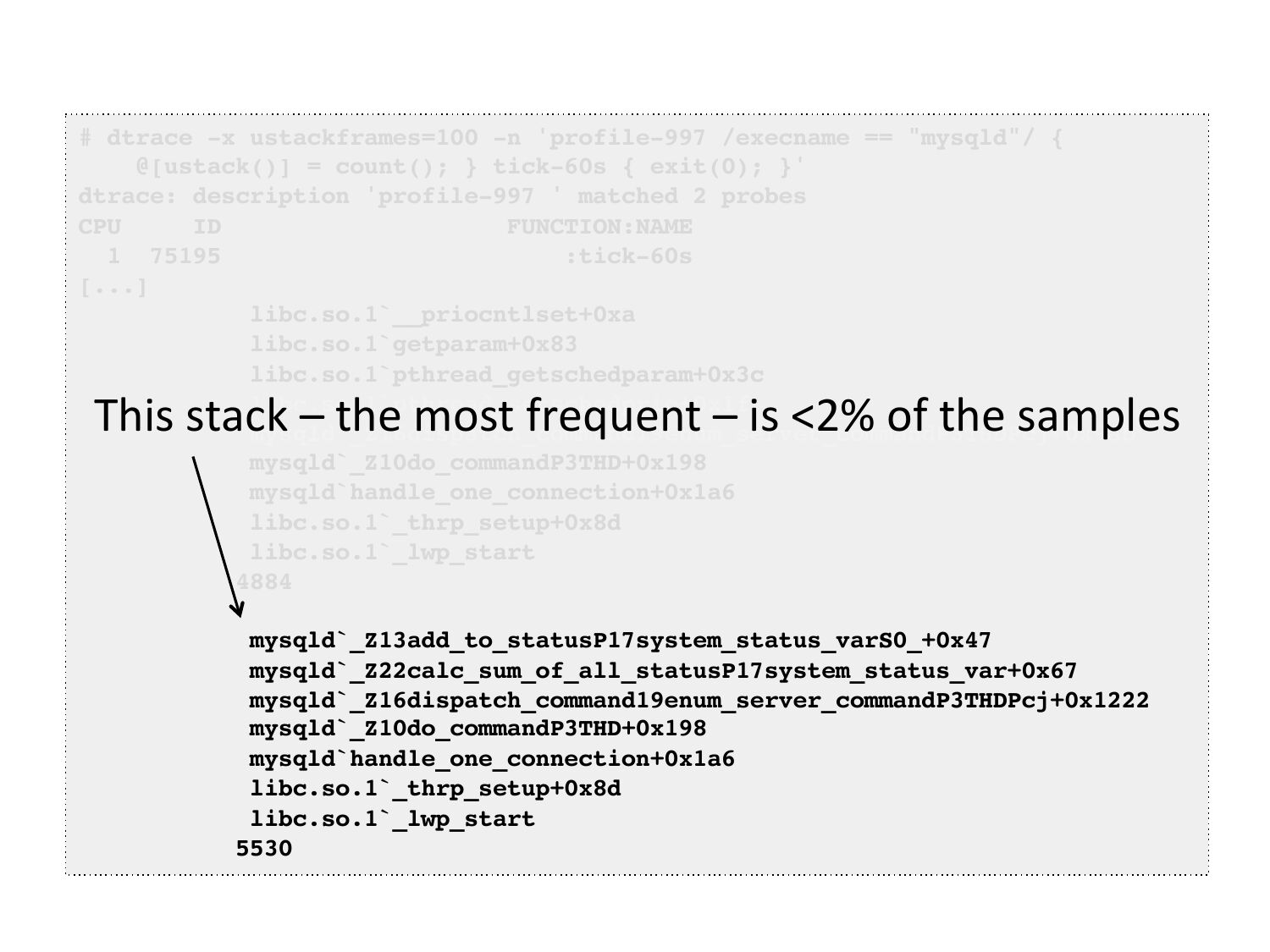

This

stack

–

the

most

frequent

–

is

slide 9:

# dtrace -x ustackframes=100 -n 'profile-997 /execname == "mysqld"/ {!

@[ustack()] = count(); } tick-60s { exit(0); }'!

dtrace: description 'profile-997 ' matched 2 probes!

CPU

FUNCTION:NAME!

1 75195

:tick-60s!

[...]!

libc.so.1`__priocntlset+0xa!

libc.so.1`getparam+0x83!

libc.so.1`pthread_getschedparam+0x3c!

libc.so.1`pthread_setschedprio+0x1f!

mysqld`_Z16dispatch_command19enum_server_commandP3THDPcj+0x9ab!

mysqld`_Z10do_commandP3THD+0x198!

mysqld`handle_one_connection+0x1a6!

libc.so.1`_thrp_setup+0x8d!

libc.so.1`_lwp_start!

4884!

mysqld`_Z13add_to_statusP17system_status_varS0_+0x47!

mysqld`_Z22calc_sum_of_all_statusP17system_status_var+0x67!

mysqld`_Z16dispatch_command19enum_server_commandP3THDPcj+0x1222!

mysqld`_Z10do_commandP3THD+0x198!

mysqld`handle_one_connection+0x1a6!

libc.so.1`_thrp_setup+0x8d!

libc.so.1`_lwp_start!

5530!

Despite

the

terse

output,

I

elided

over

500,000

lines

Here

is

what

all

the

output

looks

like…

slide 10:

slide 11:

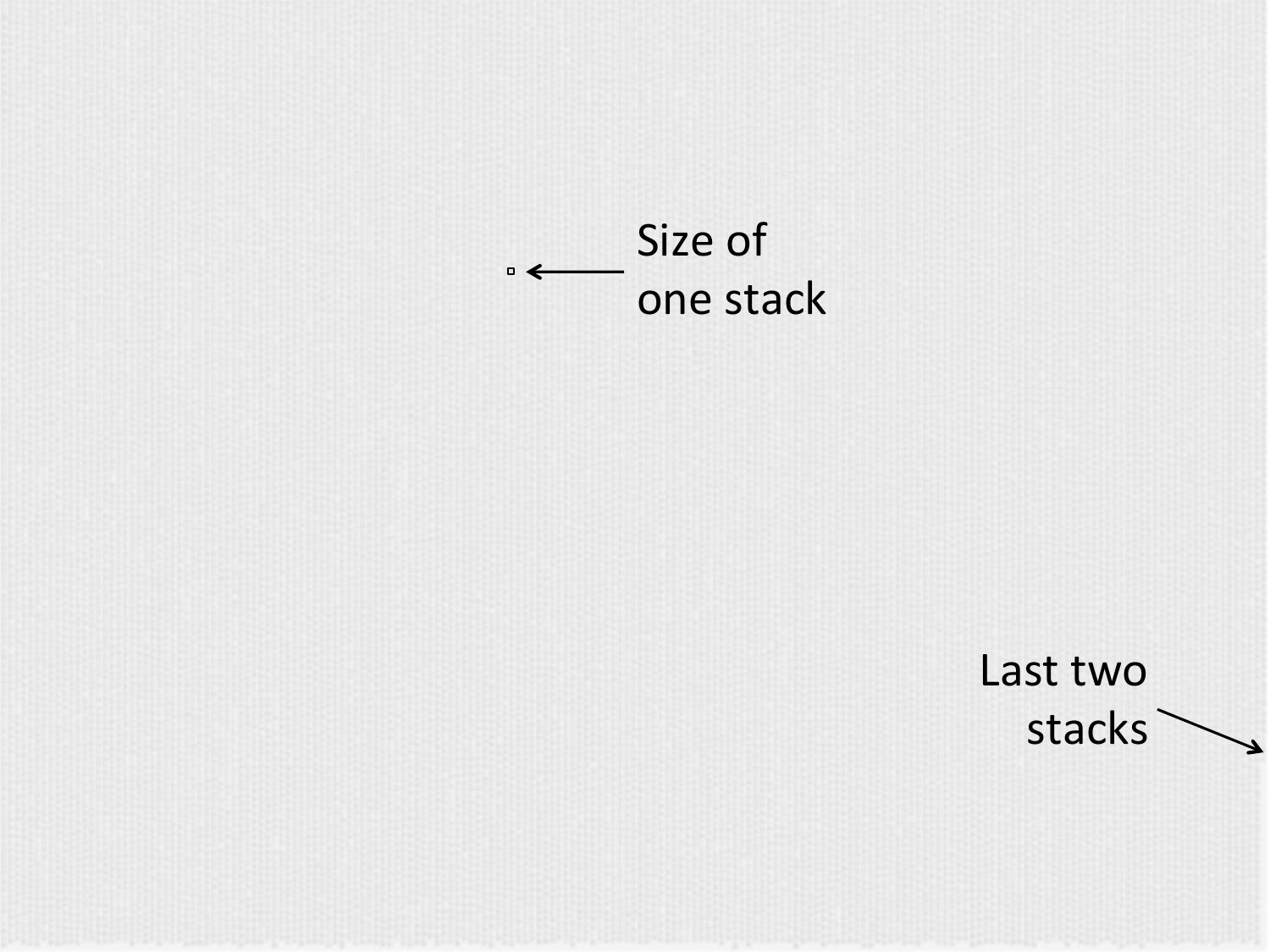

Size

of

one

stack

Last

two

stacks

slide 12:

These

are

just

the

unique

stacks.

I

have

to

compare

this

grey

featureless

square,

with

a

grey

square

from

the

other

host,

and

explain

the

30%

CPU

difference.

And

I

need

to

do

this

by

Friday.

slide 13:

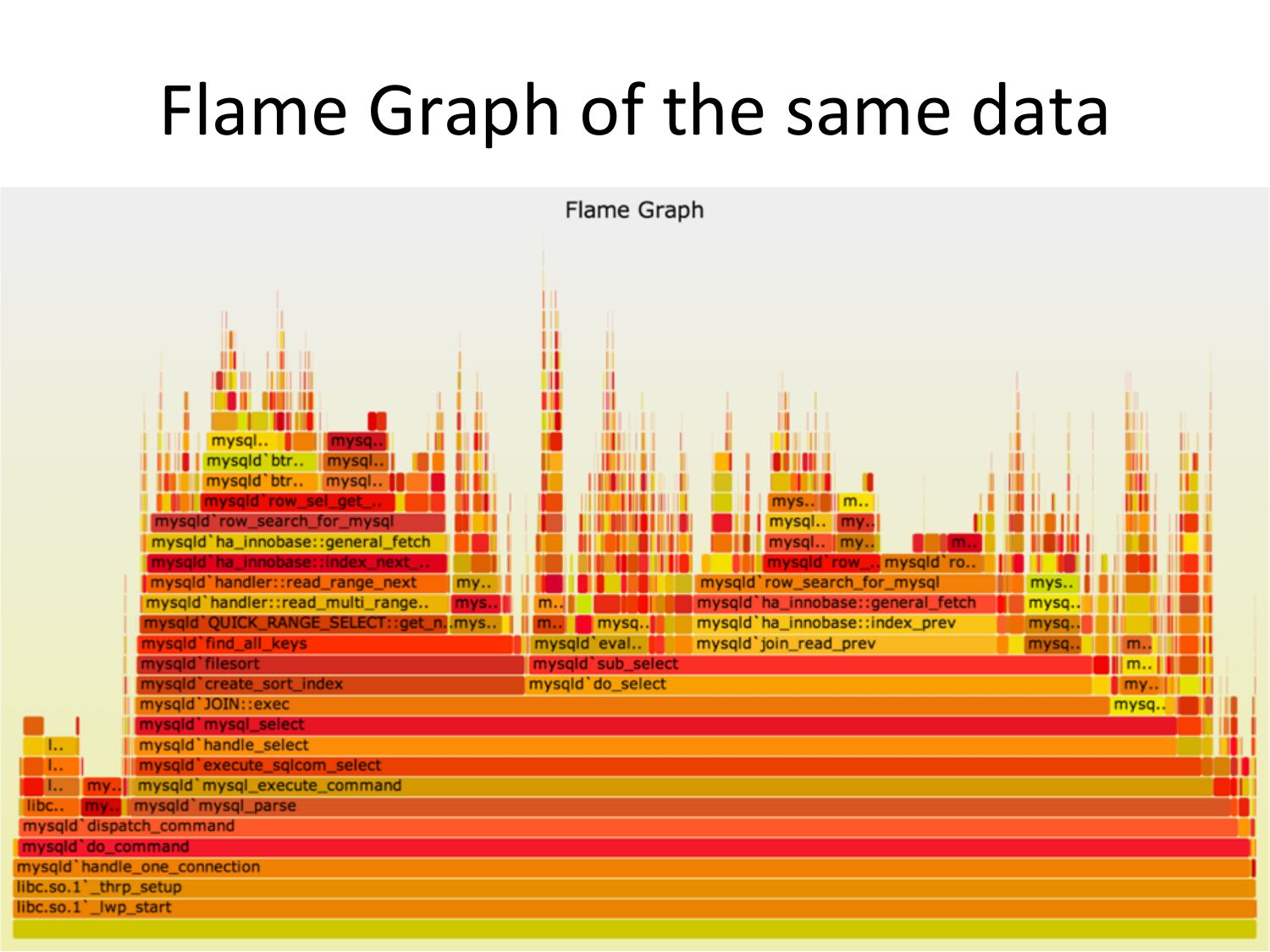

Flame

Graph

of

the

same

data

slide 14:

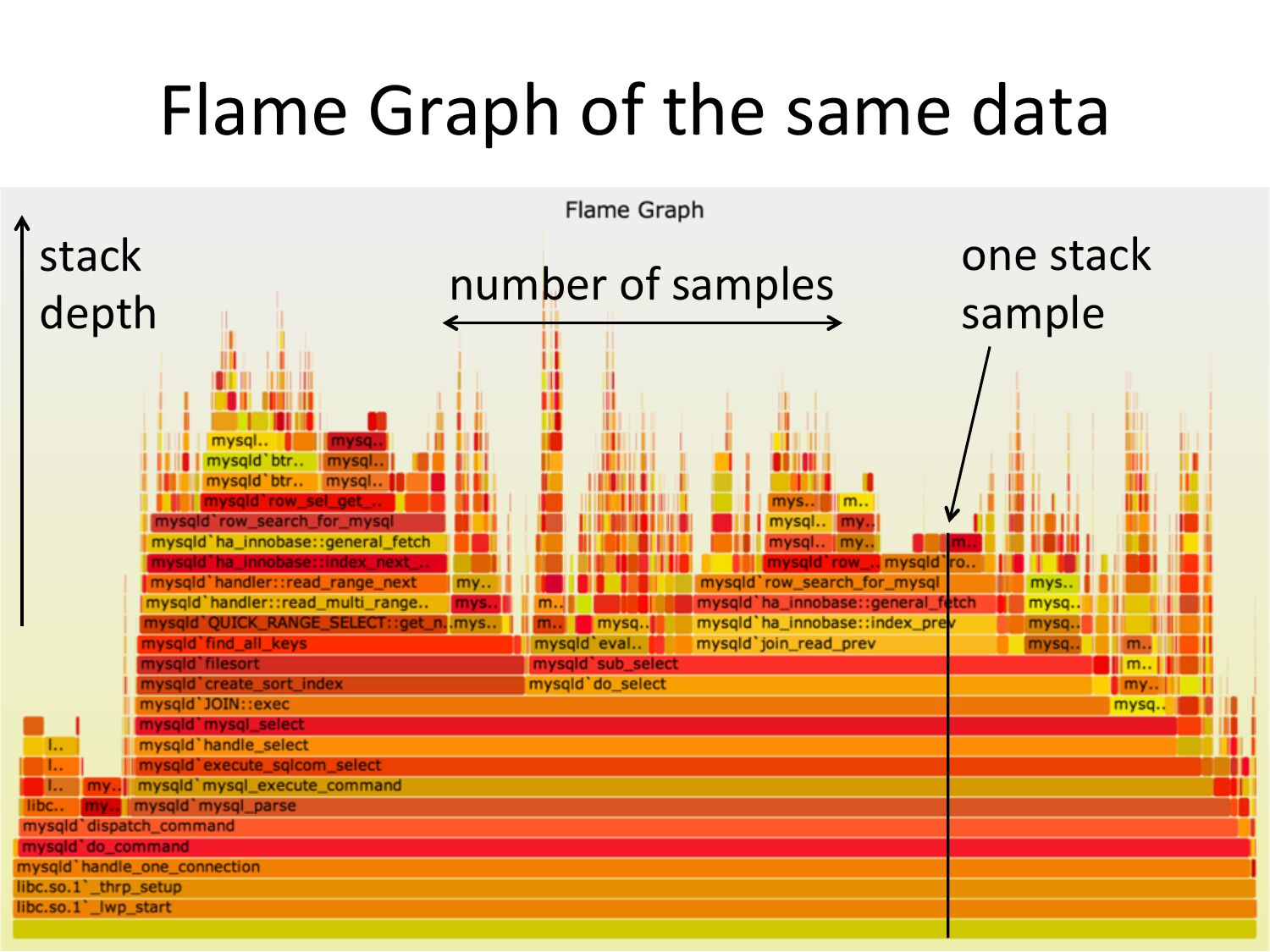

Flame

Graph

of

the

same

data

stack

depth

number

of

samples

one

stack

sample

slide 15:

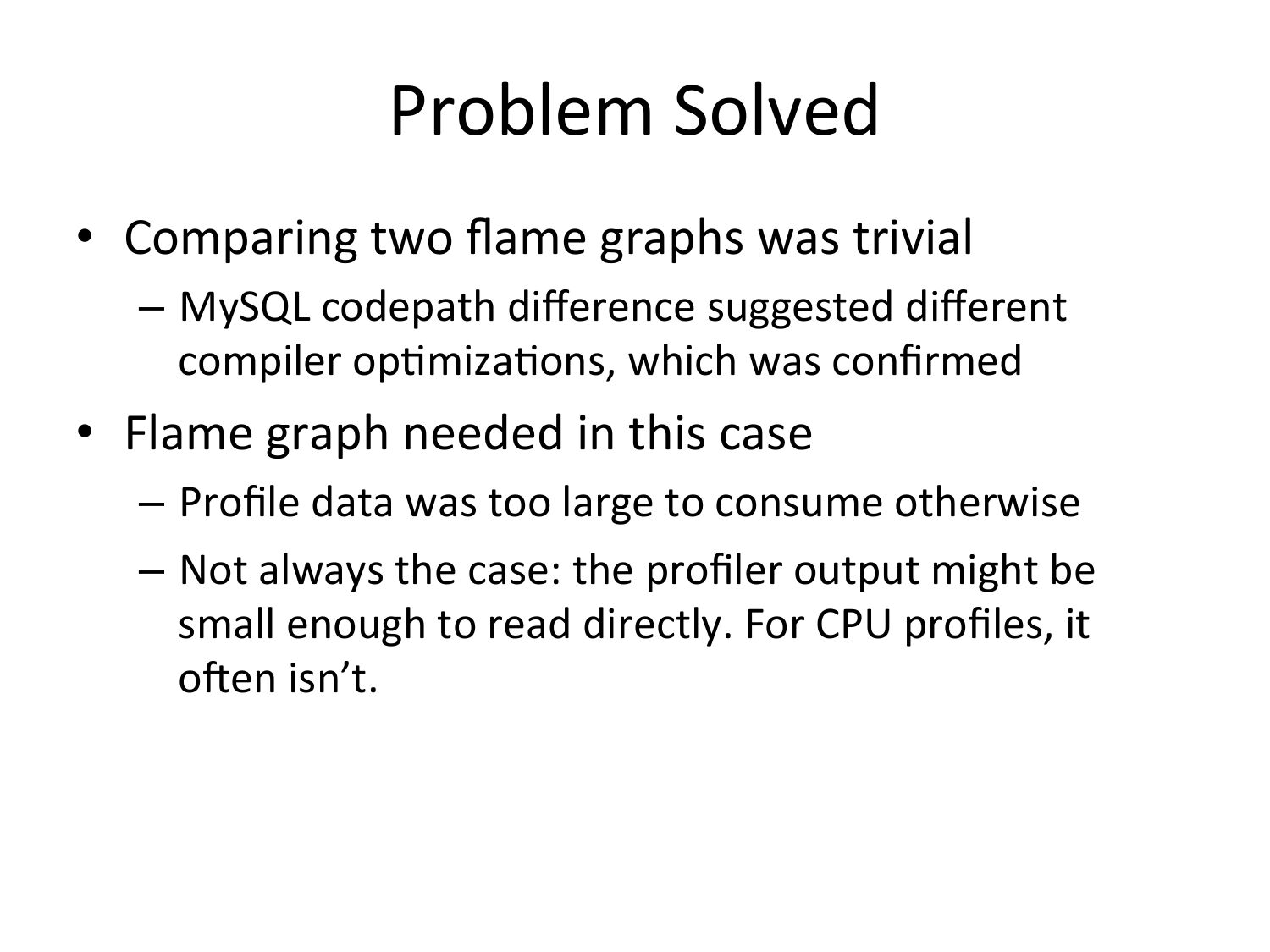

Problem

Solved

• Comparing

two

flame

graphs

was

trivial

– MySQL

codepath

difference

suggested

different

compiler

op=miza=ons,

which

was

confirmed

• Flame

graph

needed

in

this

case

– Profile

data

was

too

large

to

consume

otherwise

– Not

always

the

case:

the

profiler

output

might

be

small

enough

to

read

directly.

For

CPU

profiles,

it

ofen

isn’t.

slide 16:

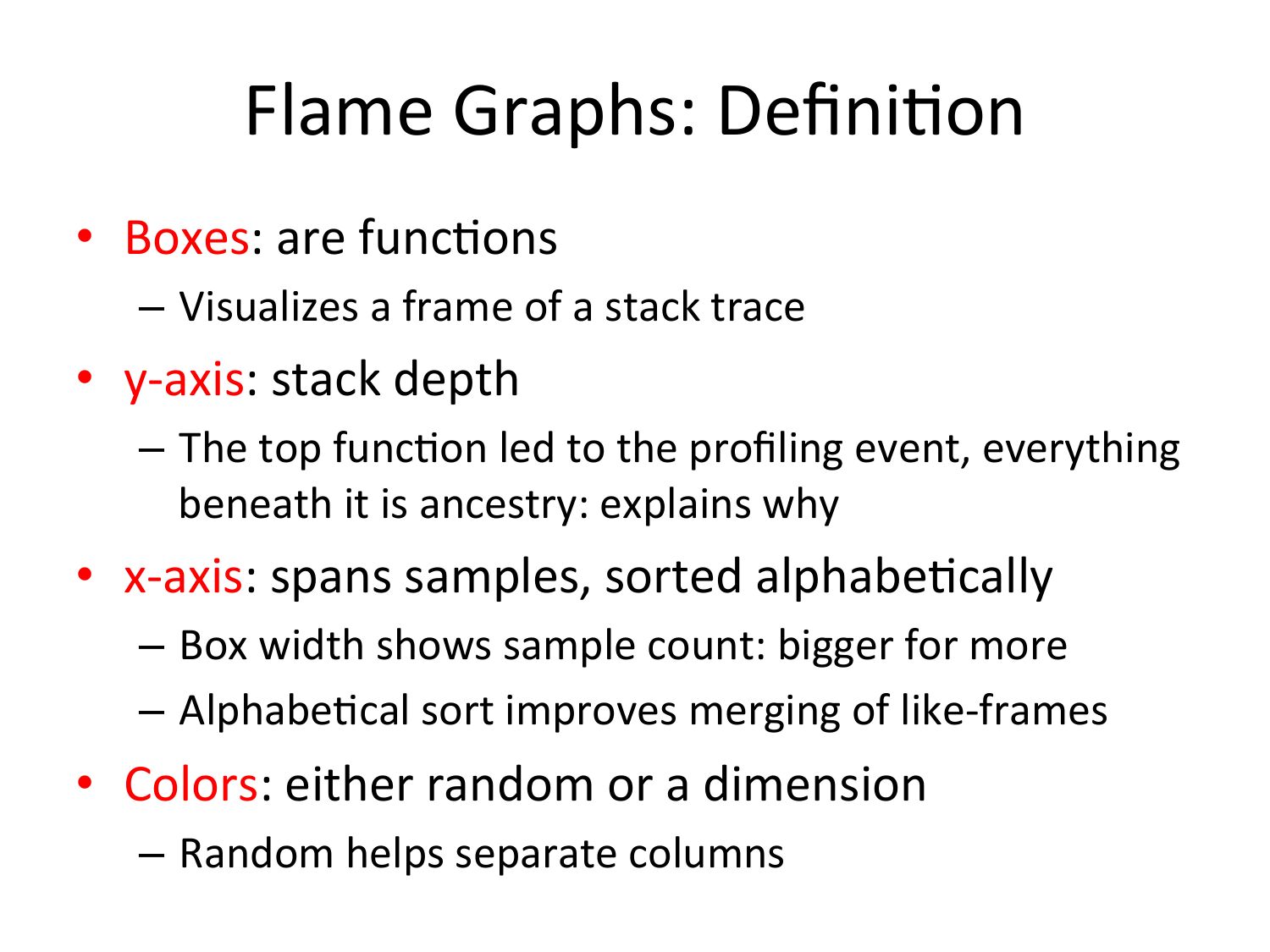

Flame

Graphs:

Defini=on

• Boxes:

are

func=ons

– Visualizes

a

frame

of

a

stack

trace

• y-‐axis:

stack

depth

– The

top

func=on

led

to

the

profiling

event,

everything

beneath

it

is

ancestry:

explains

why

• x-‐axis:

spans

samples,

sorted

alphabe=cally

– Box

width

shows

sample

count:

bigger

for

more

– Alphabe=cal

sort

improves

merging

of

like-‐frames

• Colors:

either

random

or

a

dimension

– Random

helps

separate

columns

slide 17:

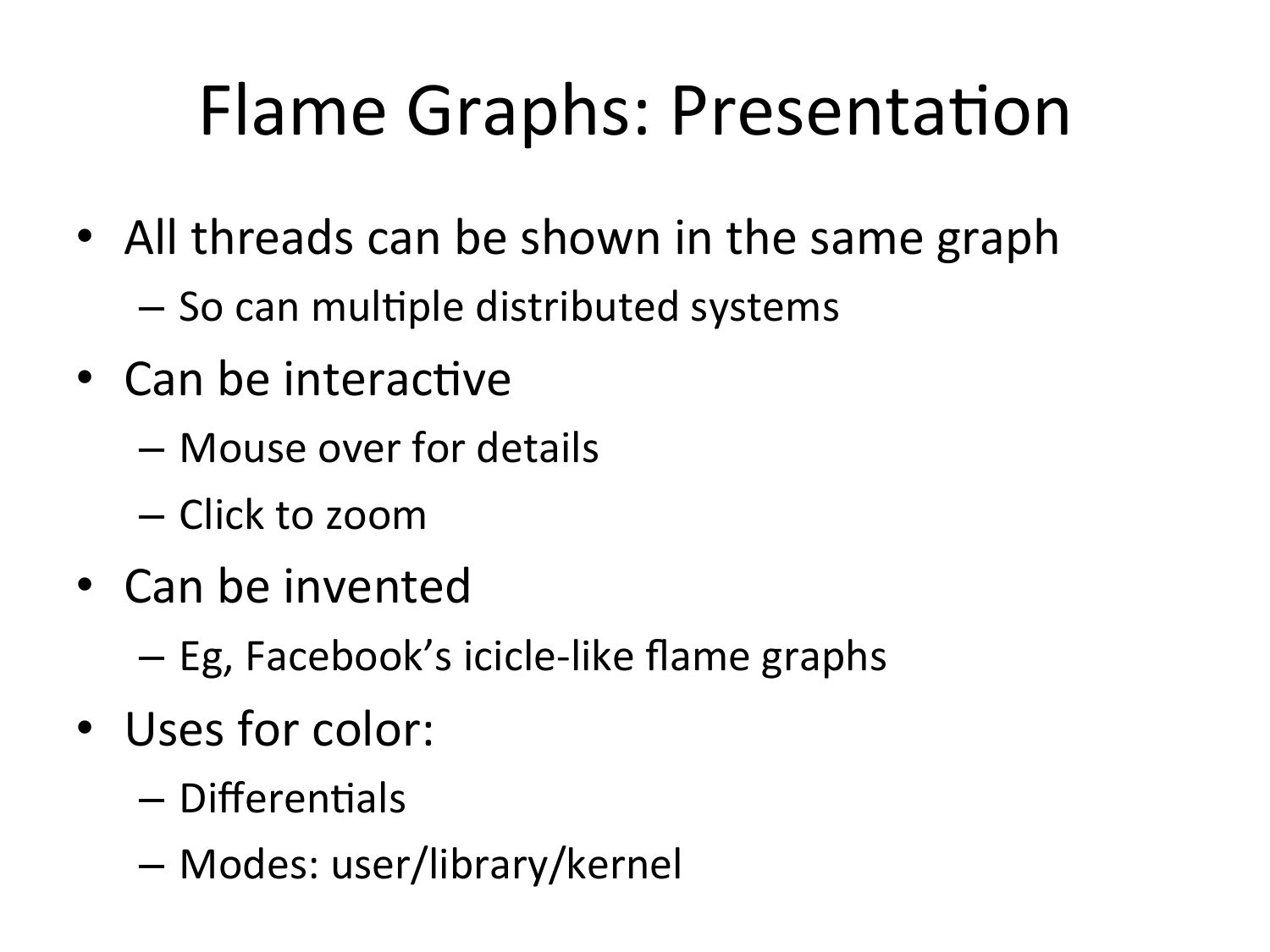

Flame

Graphs:

Presenta=on

• All

threads

can

be

shown

in

the

same

graph

– So

can

mul=ple

distributed

systems

• Can

be

interac=ve

– Mouse

over

for

details

– Click

to

zoom

• Can

be

invented

– Eg,

Facebook’s

icicle-‐like

flame

graphs

• Uses

for

color:

– Differen=als

– Modes:

user/library/kernel

slide 18:

2.

Genera=on

slide 19:

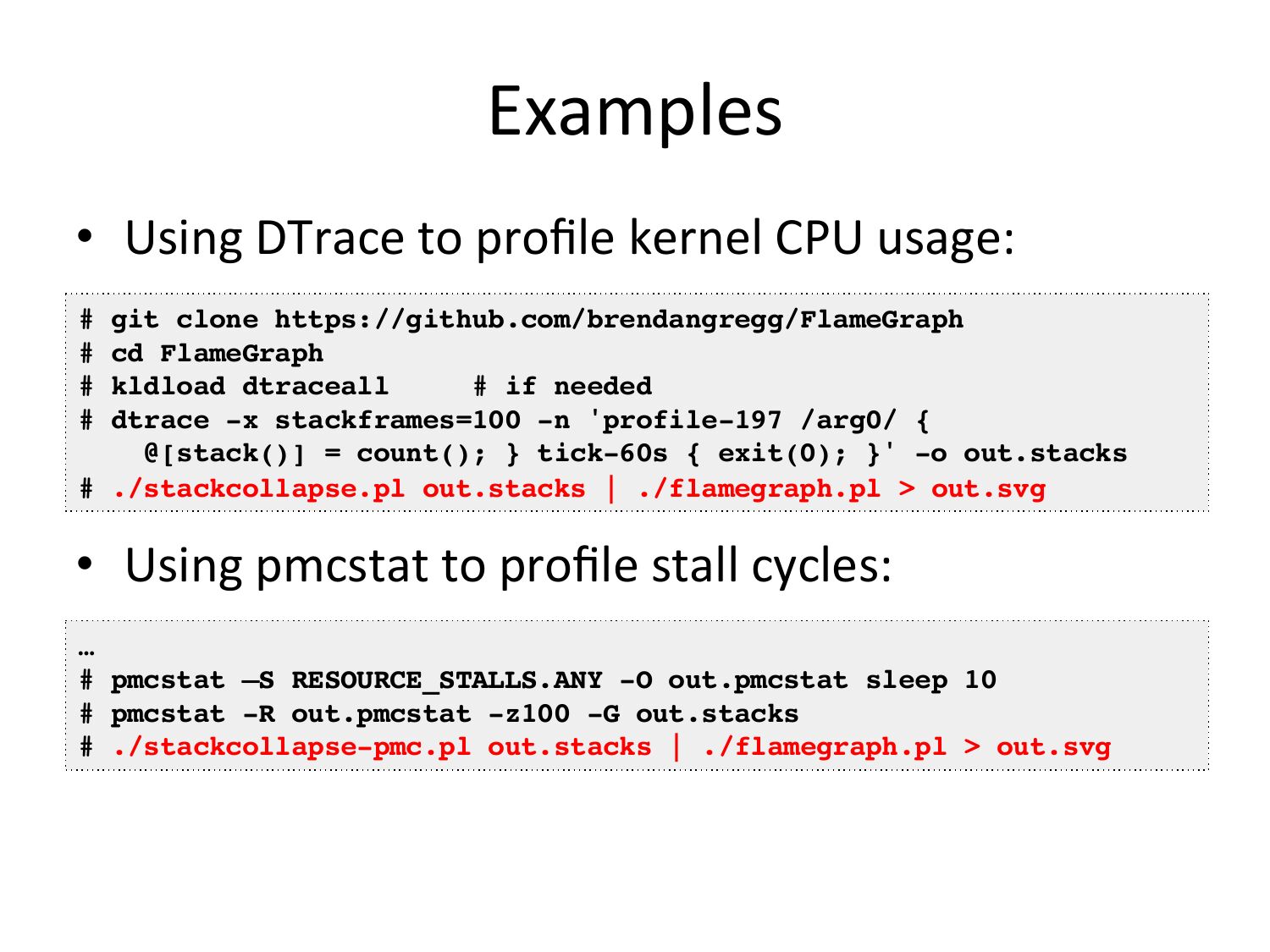

Examples

• Using

DTrace

to

profile

kernel

CPU

usage:

git clone https://github.com/brendangregg/FlameGraph!

## cd

FlameGraph!

# kldload dtraceall

# if needed!

# dtrace -x stackframes=100 -n 'profile-197 /arg0/ {!

@[stack()] = count(); } tick-60s { exit(0); }' -o out.stacks!

# ./stackcollapse.pl out.stacks | ./flamegraph.pl >gt; out.svg!

• Using

pmcstat

to

profile

stall

cycles:

… !

# pmcstat –S RESOURCE_STALLS.ANY -O out.pmcstat sleep 10!

# pmcstat -R out.pmcstat -z100 -G out.stacks!

# ./stackcollapse-pmc.pl out.stacks | ./flamegraph.pl >gt; out.svg!

slide 20:

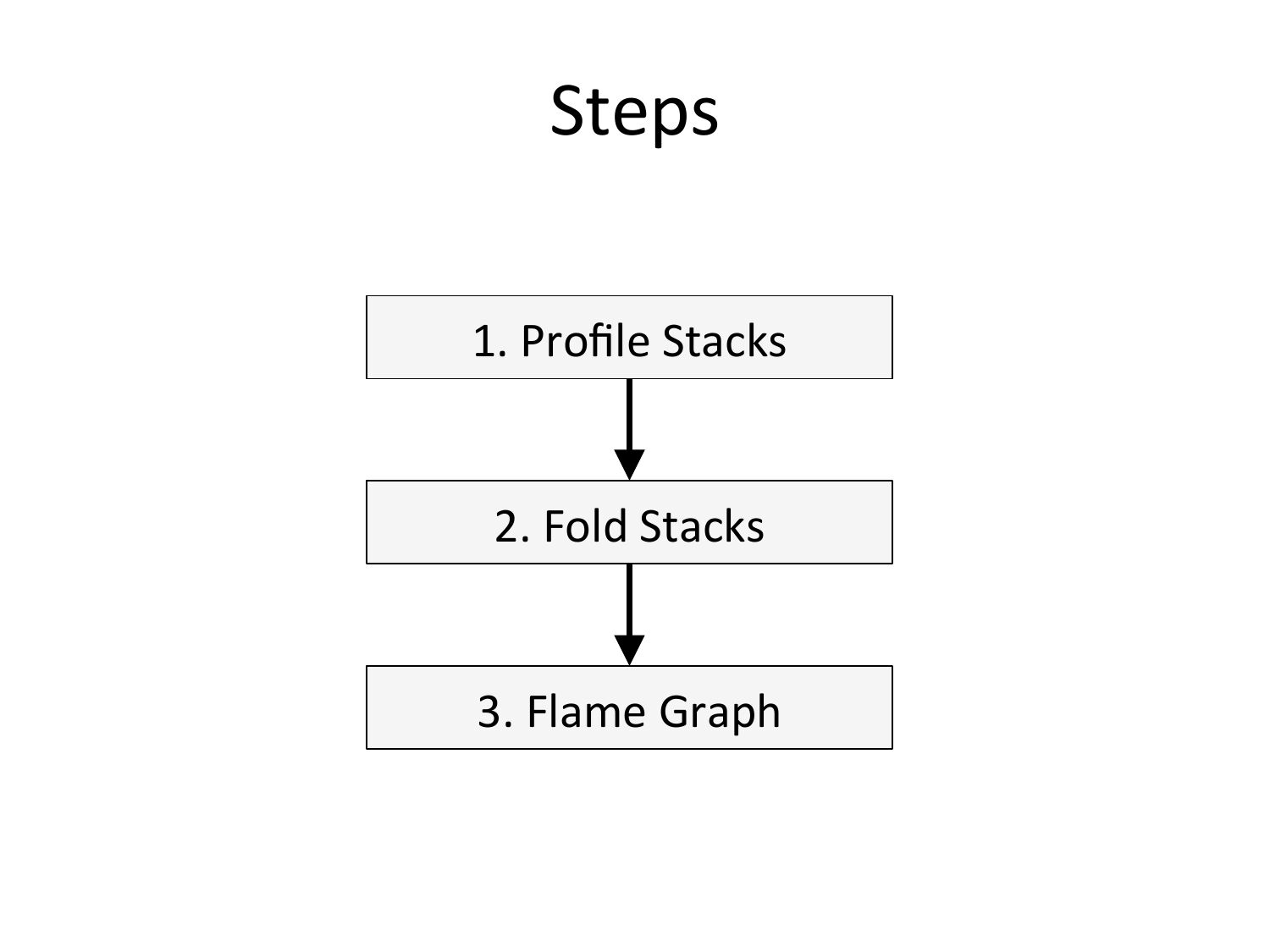

Steps

1.

Profile

Stacks

2.

Fold

Stacks

3.

Flame

Graph

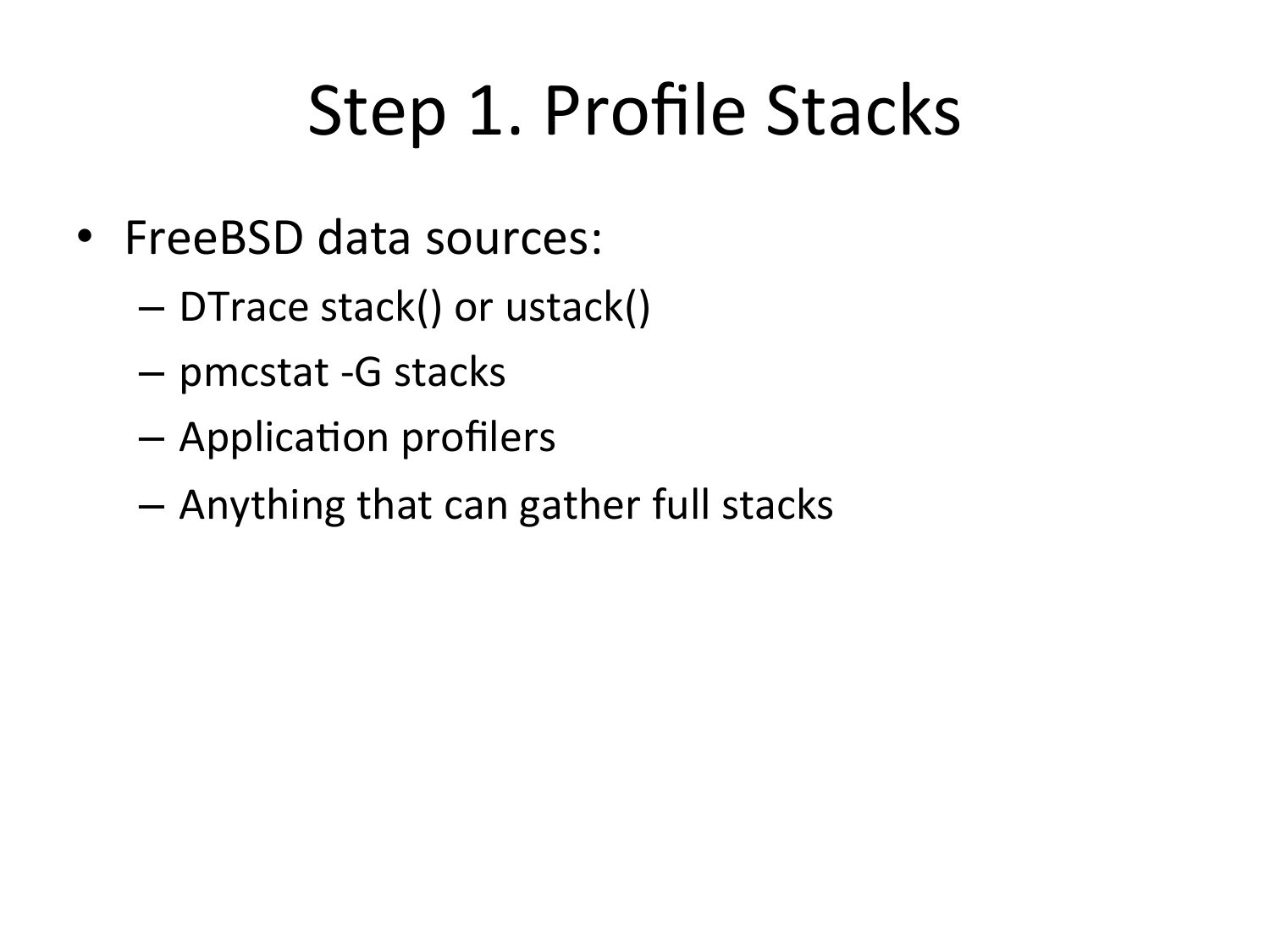

slide 21:

Step

1.

Profile

Stacks

• FreeBSD

data

sources:

– DTrace

stack()

or

ustack()

– pmcstat

-‐G

stacks

– Applica=on

profilers

– Anything

that

can

gather

full

stacks

slide 22:

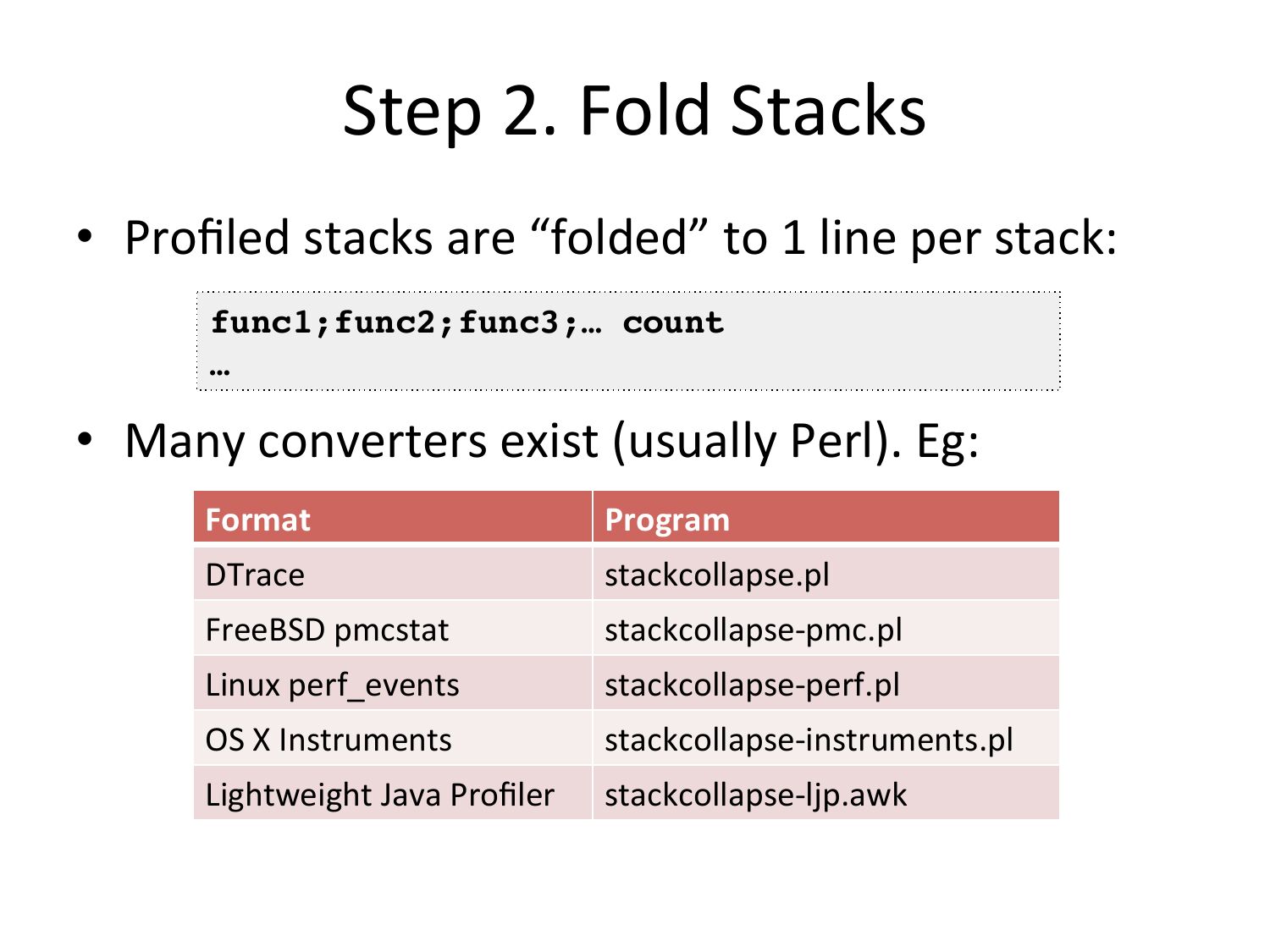

Step

2.

Fold

Stacks

• Profiled

stacks

are

“folded”

to

1

line

per

stack:

func1;func2;func3;… count!

• Many

converters

exist

(usually

Perl).

Eg:

Format

Program

DTrace

stackcollapse.pl

FreeBSD

pmcstat

stackcollapse-‐pmc.pl

Linux

perf_events

stackcollapse-‐perf.pl

OS

X

Instruments

stackcollapse-‐instruments.pl

Lightweight

Java

Profiler

stackcollapse-‐ljp.awk

slide 23:

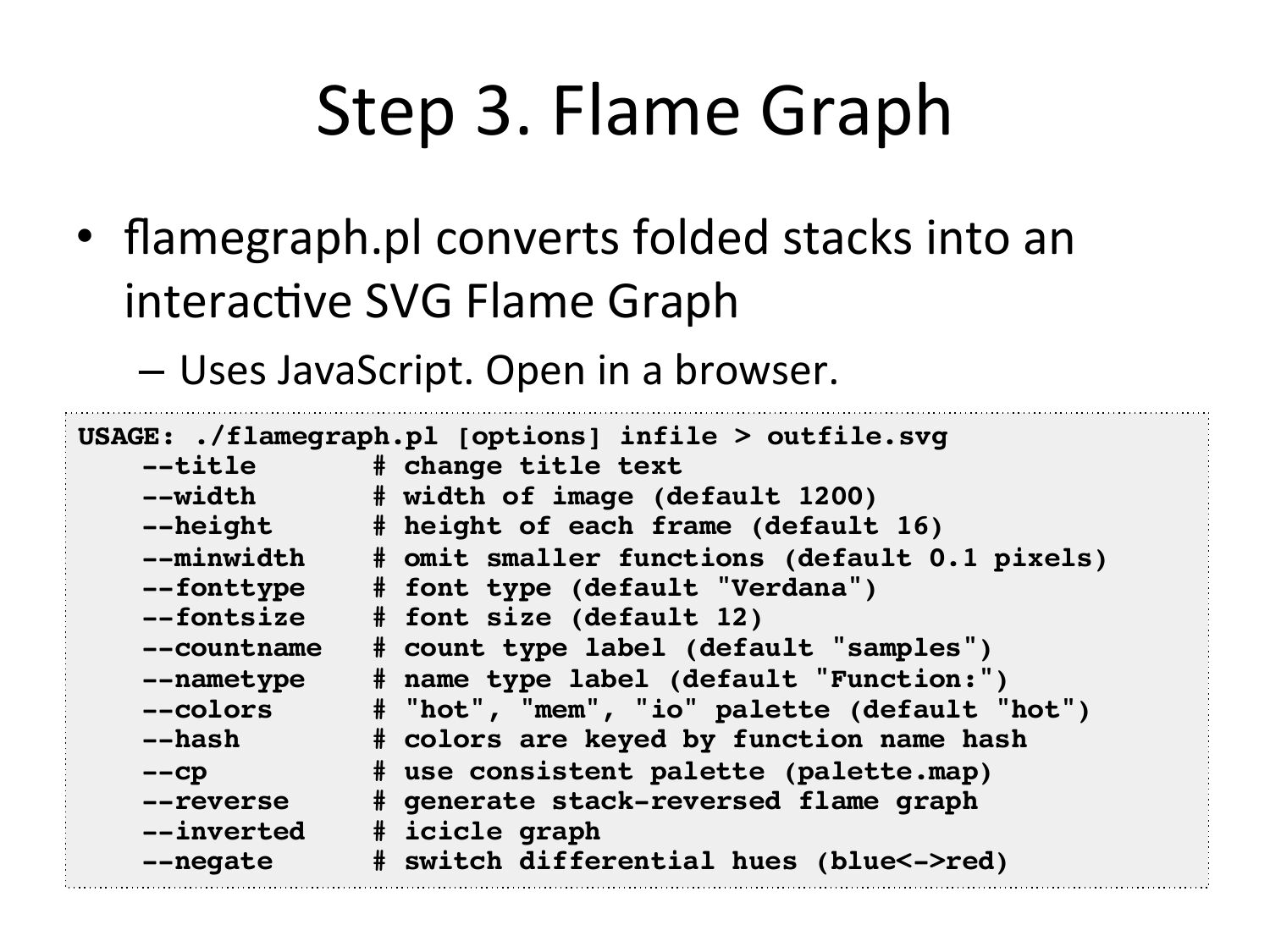

Step

3.

Flame

Graph

• flamegraph.pl

converts

folded

stacks

into

an

interac=ve

SVG

Flame

Graph

– Uses

JavaScript.

Open

in

a

browser.

USAGE: ./flamegraph.pl [options] infile >gt; outfile.svg!

!--title

# change title text!

!--width

# width of image (default 1200)!

!--height

# height of each frame (default 16)!

!--minwidth

# omit smaller functions (default 0.1 pixels)!

!--fonttype

# font type (default "Verdana")!

!--fontsize

# font size (default 12)!

!--countname

# count type label (default "samples")!

!--nametype

# name type label (default "Function:")!

!--colors

# "hot", "mem", "io" palette (default "hot")!

!--hash

# colors are keyed by function name hash!

!--cp

# use consistent palette (palette.map)!

!--reverse

# generate stack-reversed flame graph!

!--inverted

# icicle graph!

!--negate

# switch differential hues (bluegt;red)!

slide 24:

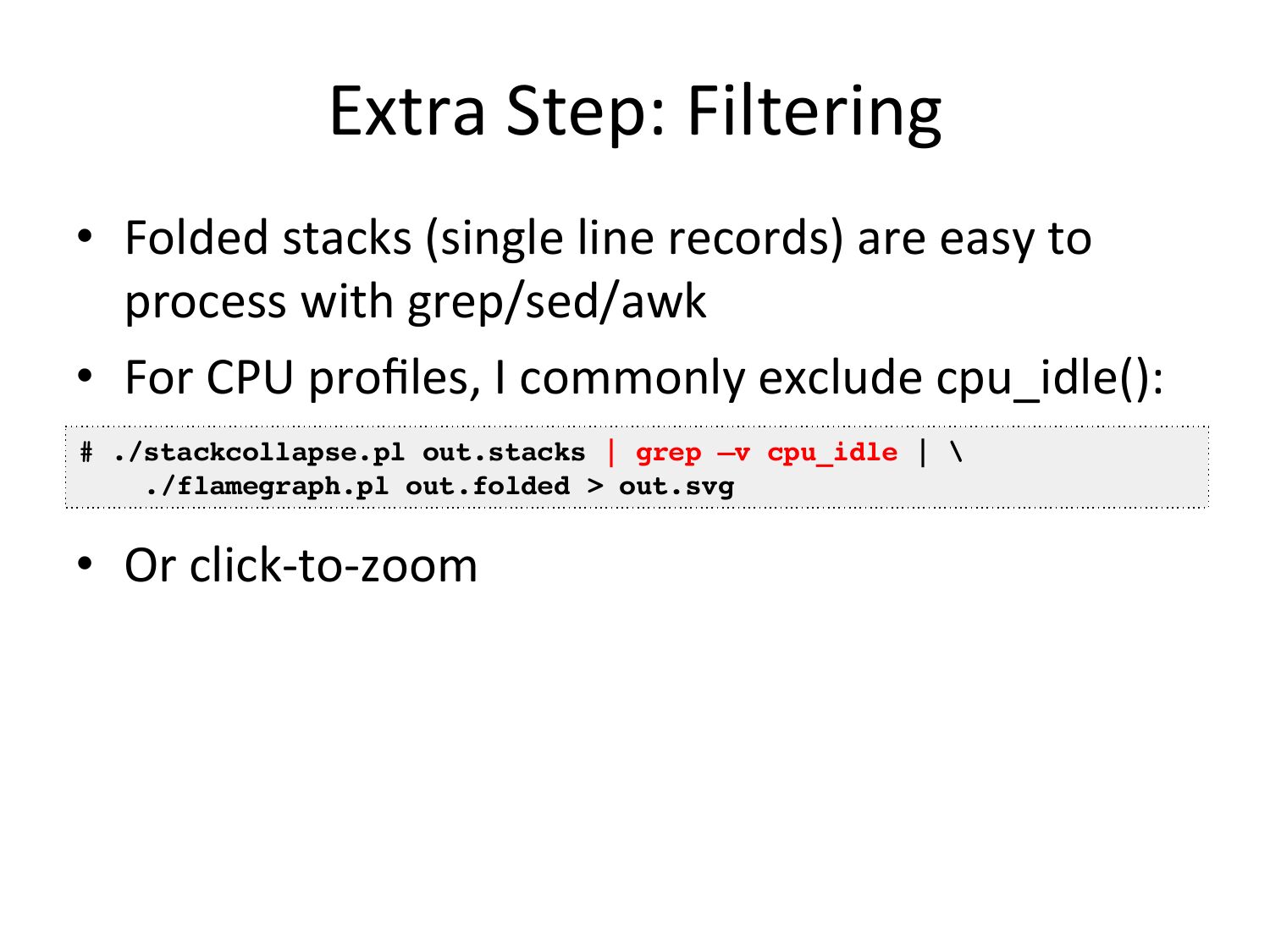

Extra

Step:

Filtering

• Folded

stacks

(single

line

records)

are

easy

to

process

with

grep/sed/awk

• For

CPU

profiles,

I

commonly

exclude

cpu_idle():

# ./stackcollapse.pl out.stacks | grep –v cpu_idle | \!

./flamegraph.pl out.folded >gt; out.svg!

• Or

click-‐to-‐zoom

slide 25:

3.

CPU

slide 26:

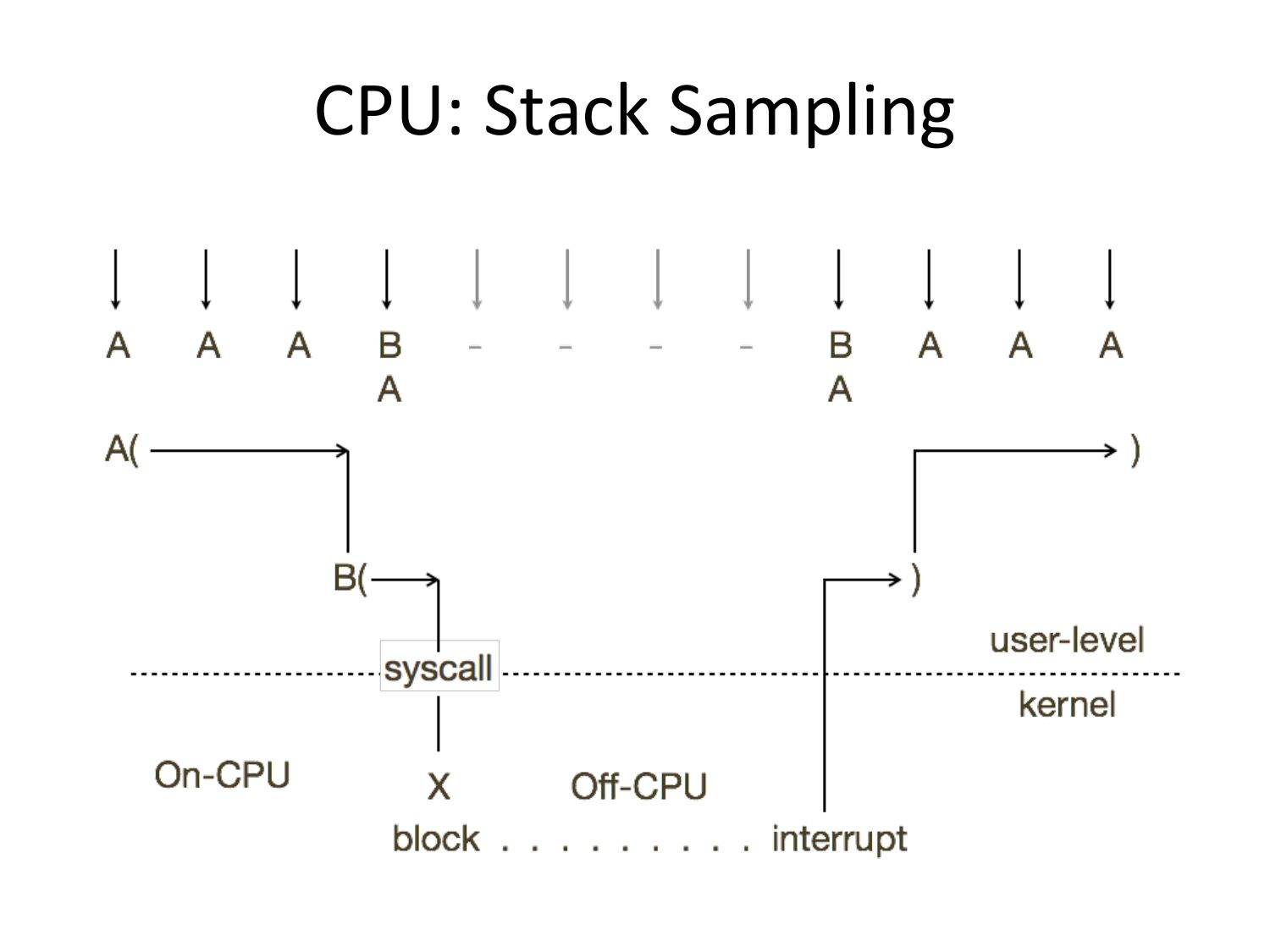

CPU:

Stack

Sampling

slide 27:

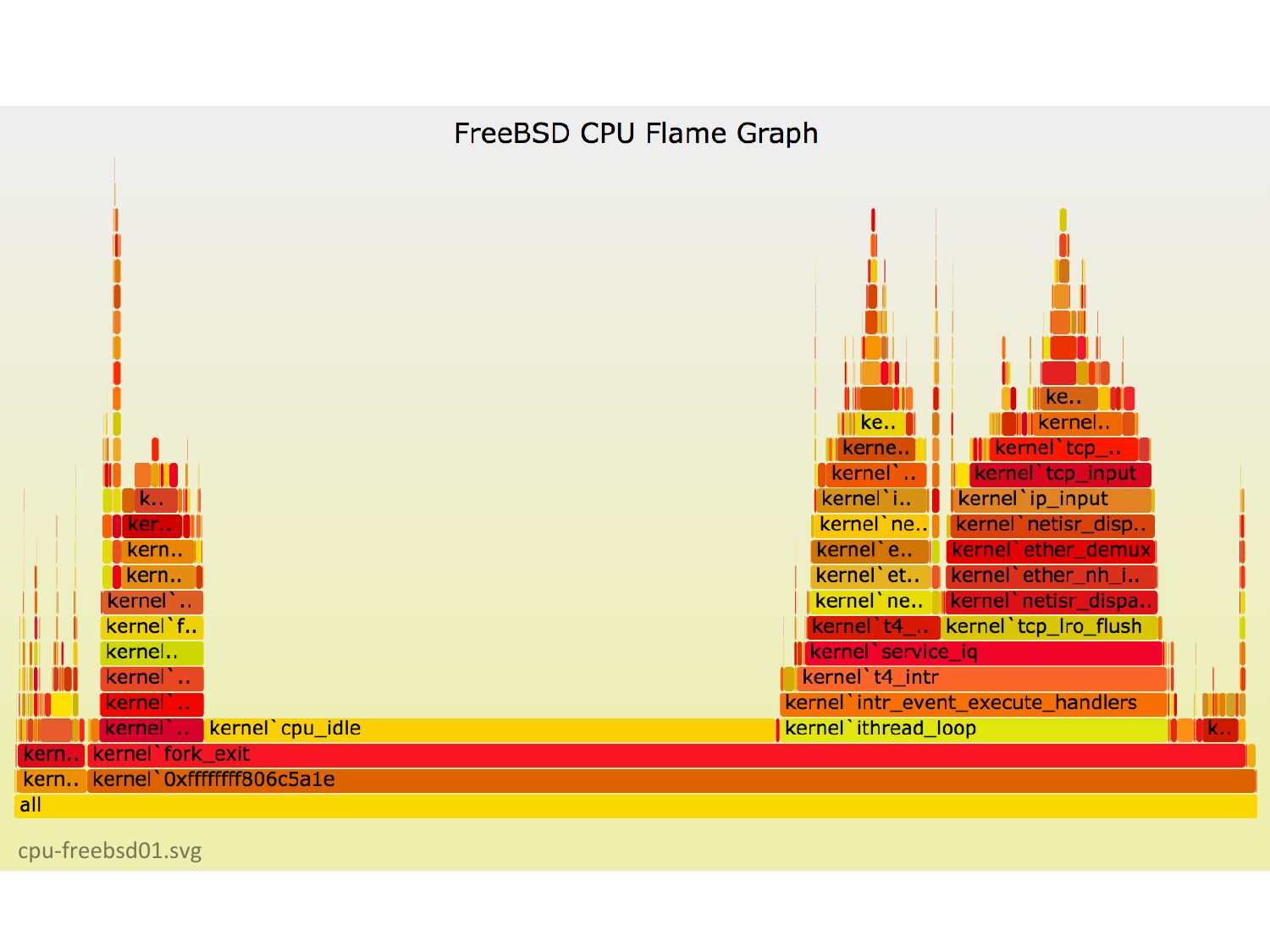

cpu-‐freebsd01.svg

slide 28:

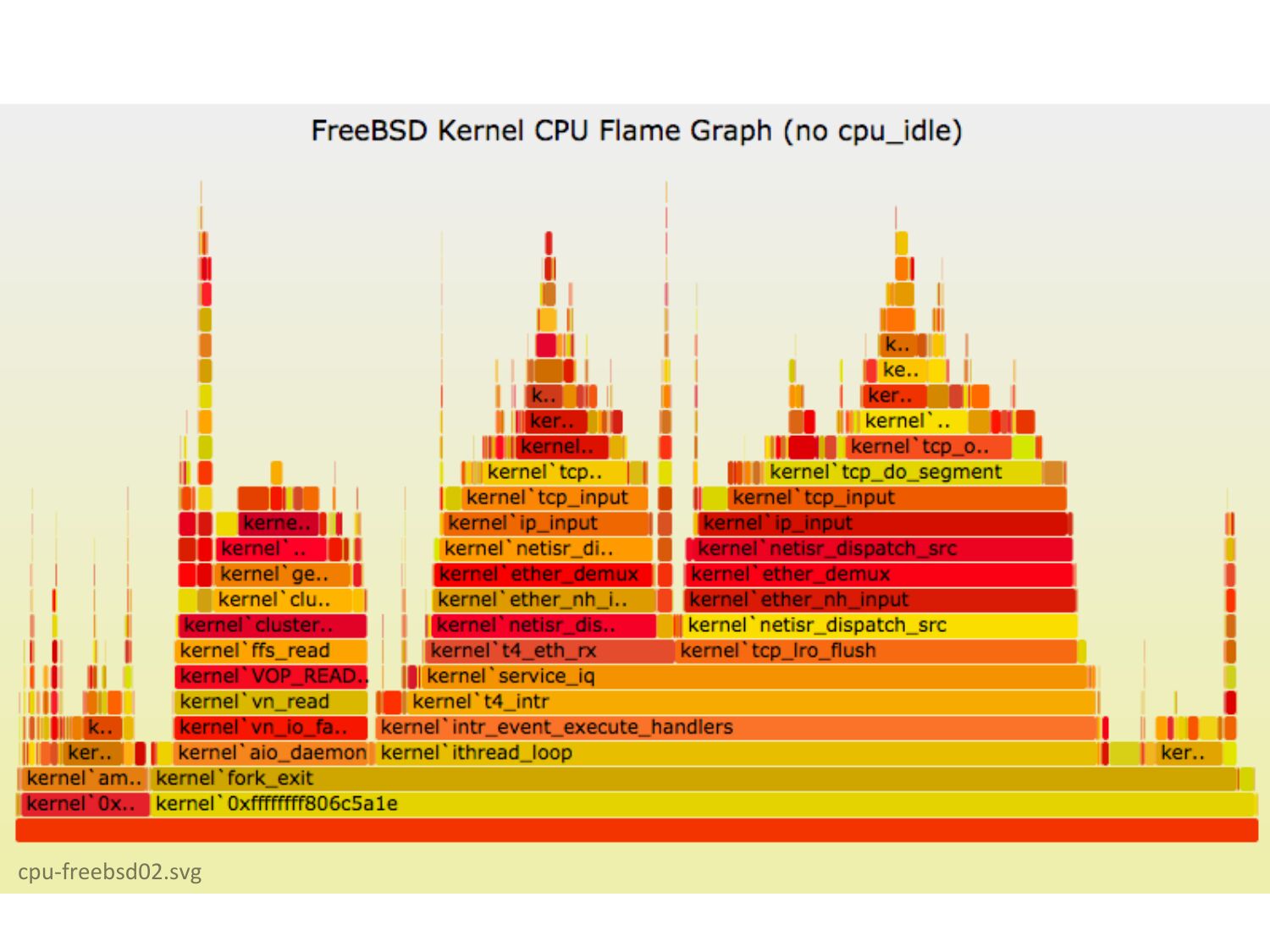

cpu-‐freebsd02.svg

slide 29:

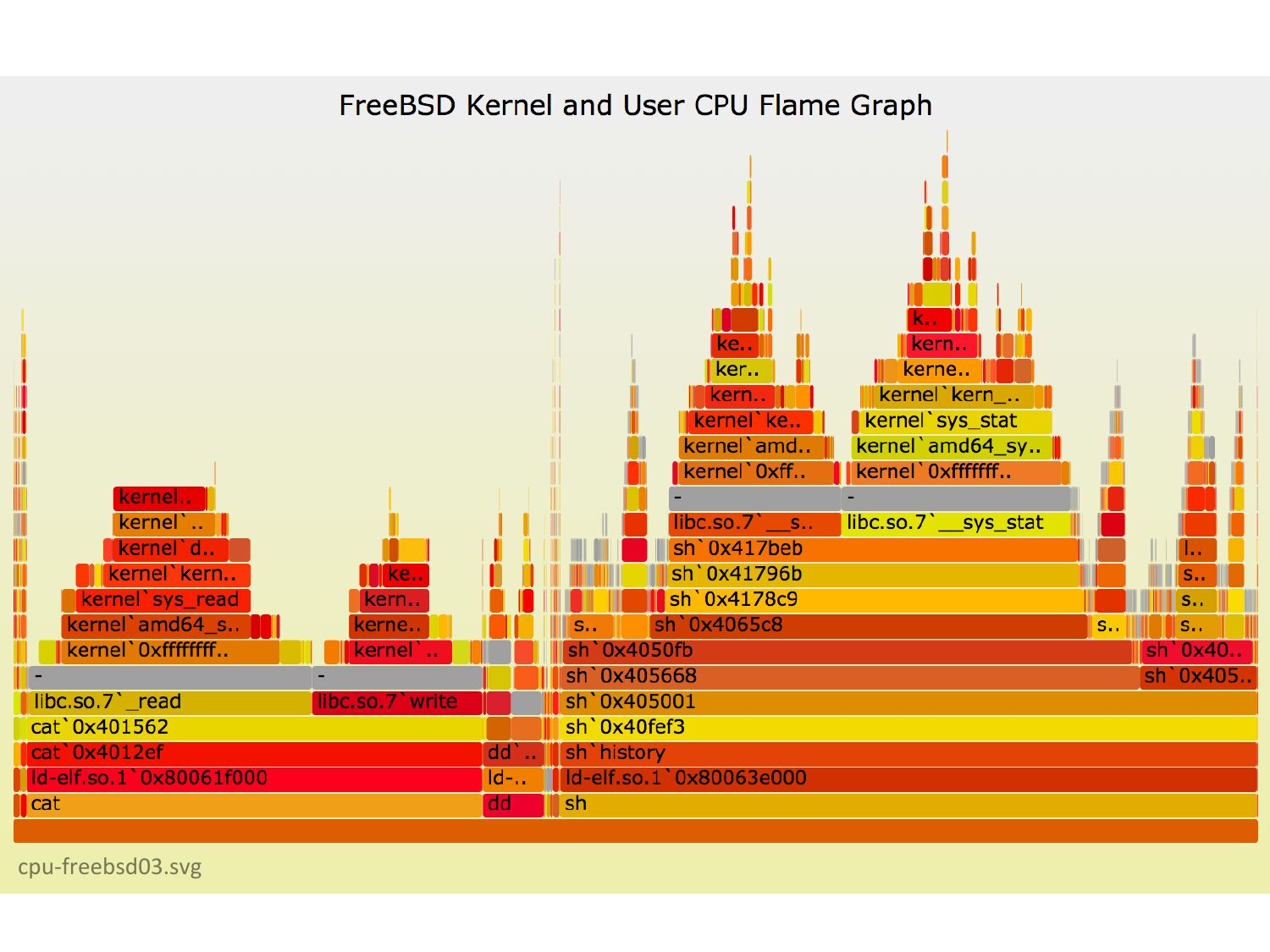

cpu-‐freebsd03.svg

slide 30:

DEMO

slide 31:

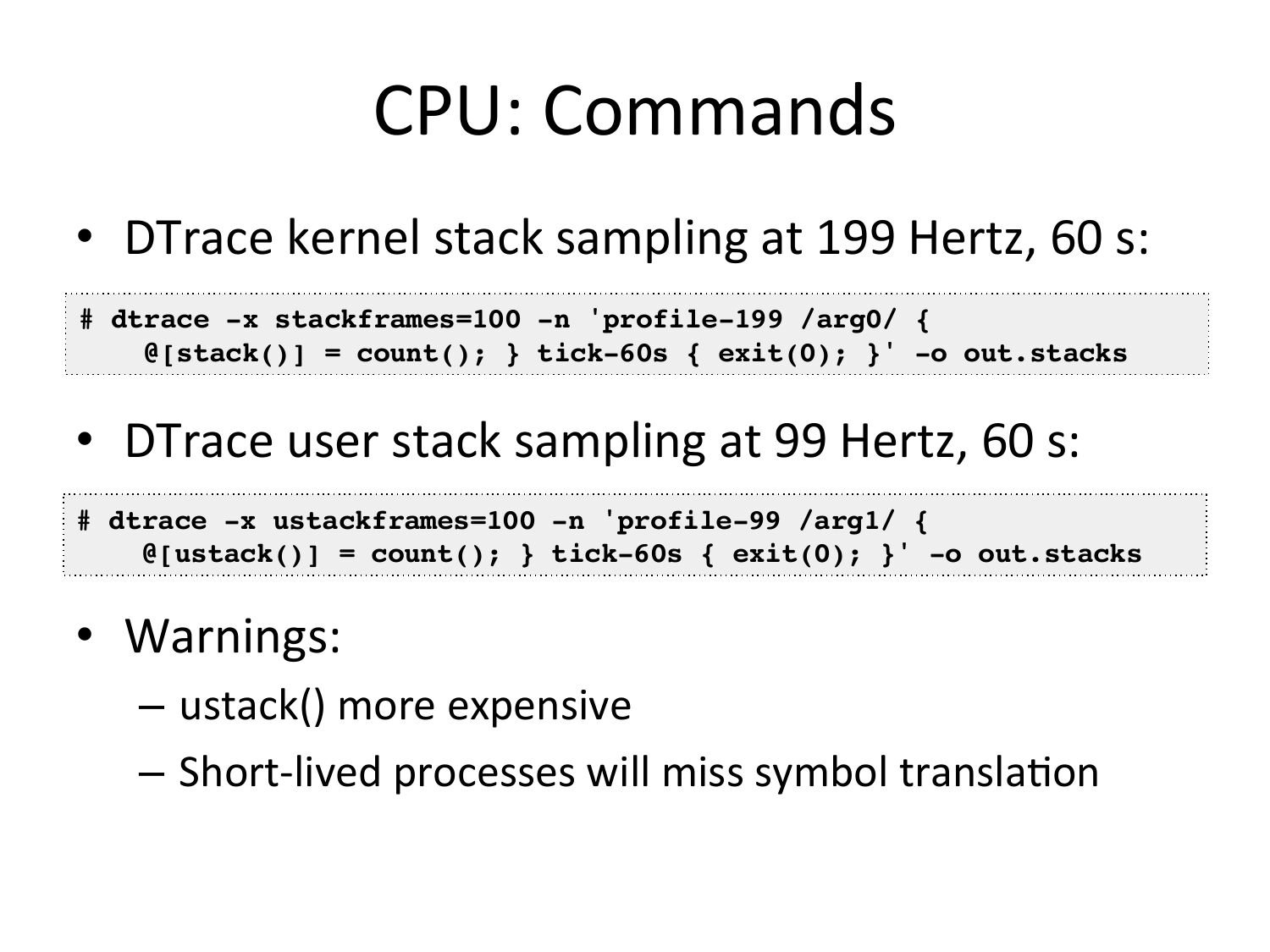

CPU:

Commands

• DTrace

kernel

stack

sampling

at

199

Hertz,

60

s:

# dtrace -x stackframes=100 -n 'profile-199 /arg0/ {!

@[stack()] = count(); } tick-60s { exit(0); }' -o out.stacks!

• DTrace

user

stack

sampling

at

99

Hertz,

60

s:

# dtrace -x ustackframes=100 -n 'profile-99 /arg1/ {!

@[ustack()] = count(); } tick-60s { exit(0); }' -o out.stacks!

• Warnings:

– ustack()

more

expensive

– Short-‐lived

processes

will

miss

symbol

transla=on

slide 32:

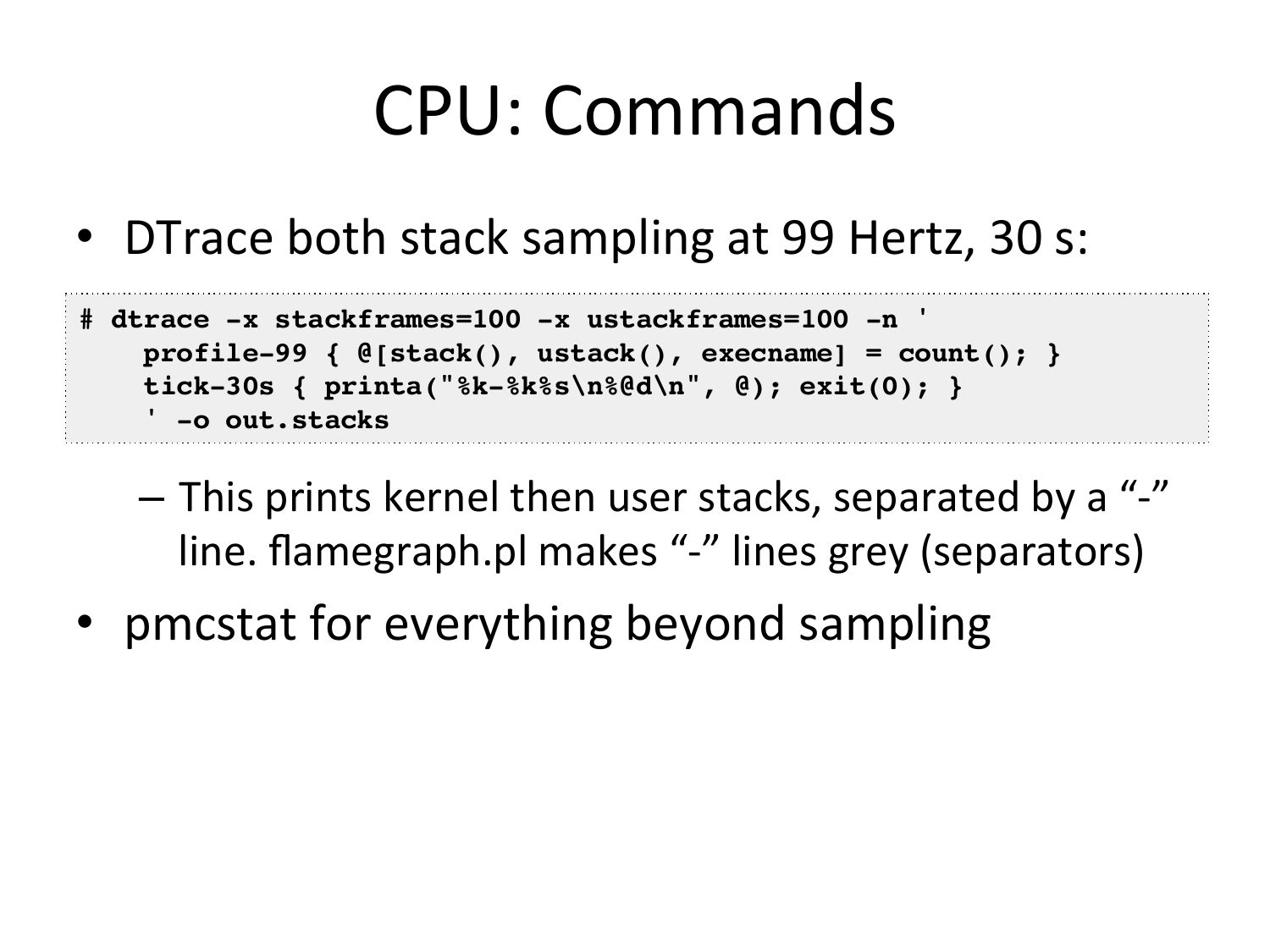

CPU:

Commands

• DTrace

both

stack

sampling

at

99

Hertz,

30

s:

# dtrace -x stackframes=100 -x ustackframes=100 -n '!

profile-99 { @[stack(), ustack(), execname] = count(); }!

tick-30s { printa("%k-%k%s\n%@d\n", @); exit(0); }!

' -o out.stacks!

– This

prints

kernel

then

user

stacks,

separated

by

a

“-‐”

line.

flamegraph.pl

makes

“-‐”

lines

grey

(separators)

• pmcstat

for

everything

beyond

sampling

slide 33:

4.

Memory

slide 34:

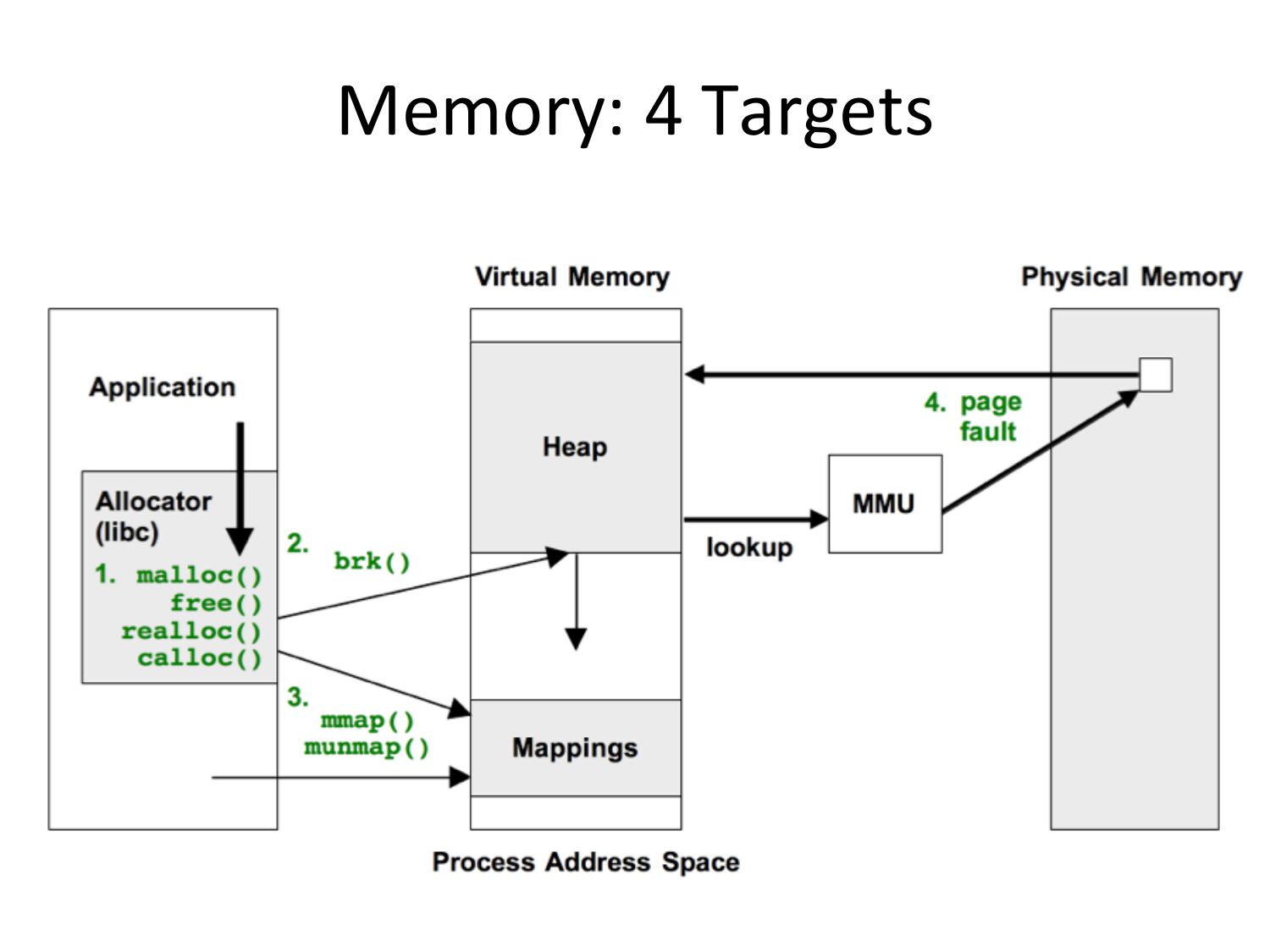

Memory:

4

Targets

slide 35:

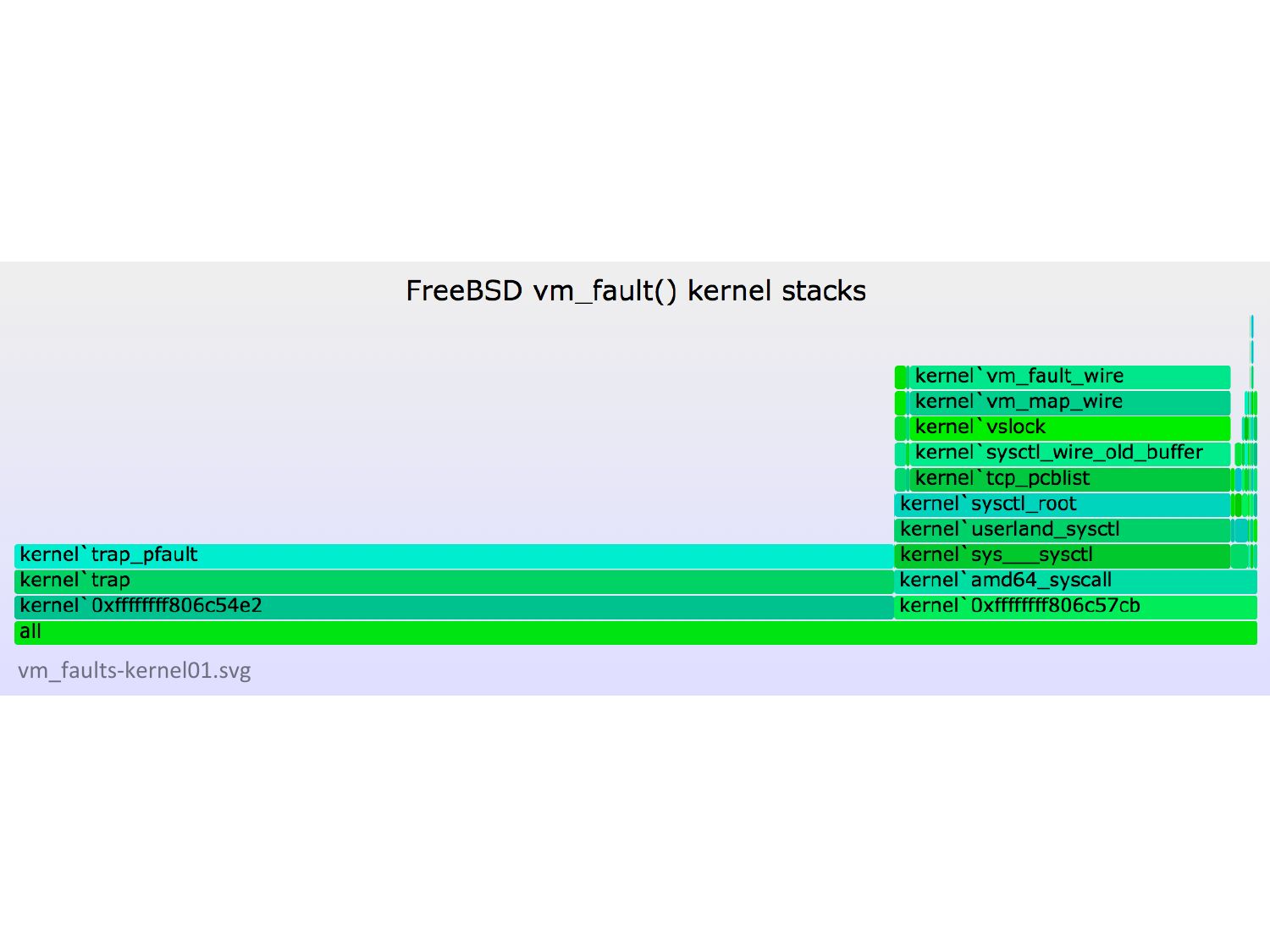

vm_faults-‐kernel01.svg

slide 36:

DEMO

slide 37:

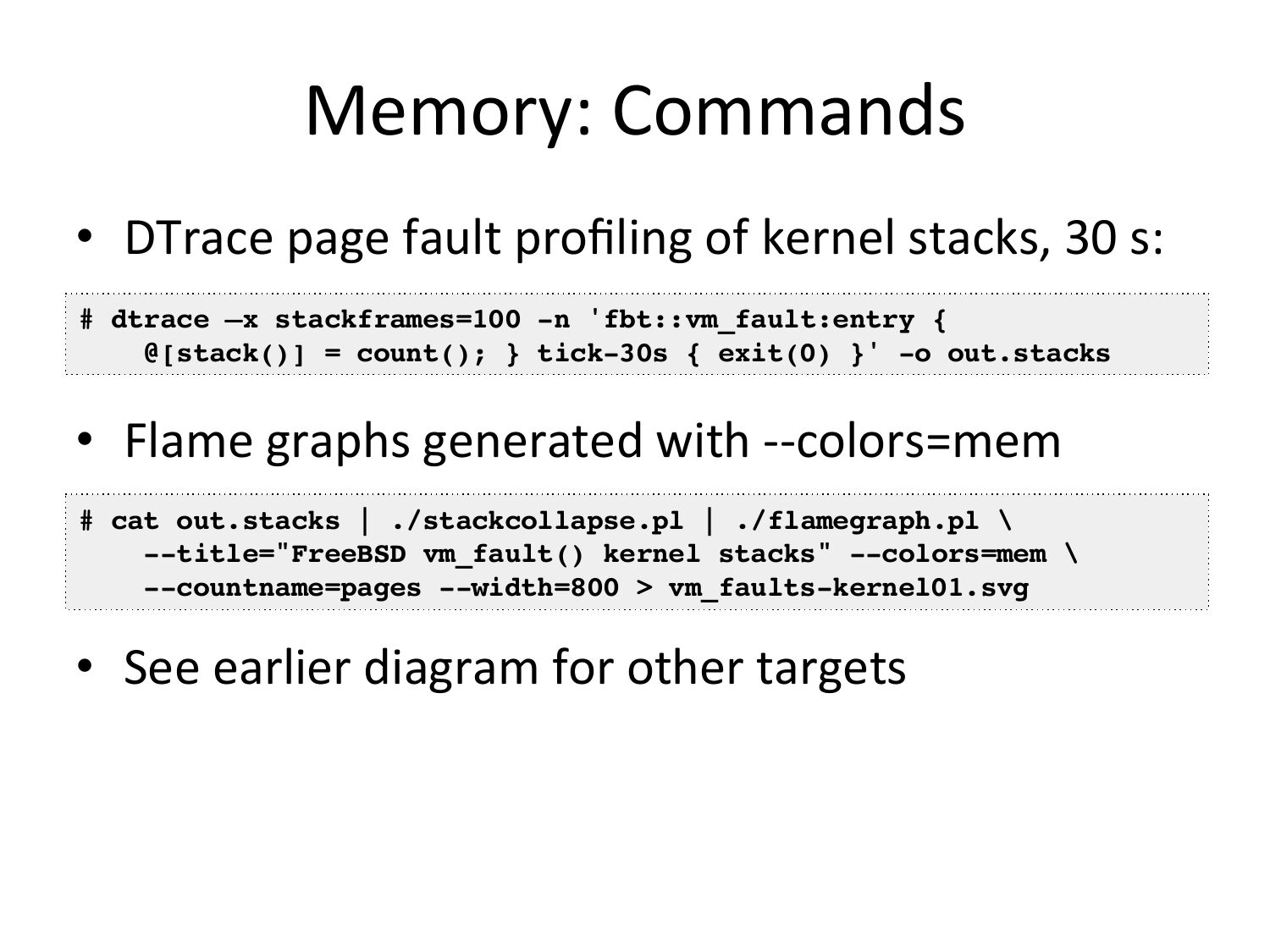

Memory:

Commands

• DTrace

page

fault

profiling

of

kernel

stacks,

30

s:

# dtrace –x stackframes=100 -n 'fbt::vm_fault:entry {!

@[stack()] = count(); } tick-30s { exit(0) }' -o out.stacks!

• Flame

graphs

generated

with

-‐-‐colors=mem

# cat out.stacks | ./stackcollapse.pl | ./flamegraph.pl \!

--title="FreeBSD vm_fault() kernel stacks" --colors=mem \!

--countname=pages --width=800 >gt; vm_faults-kernel01.svg!

• See

earlier

diagram

for

other

targets

slide 38:

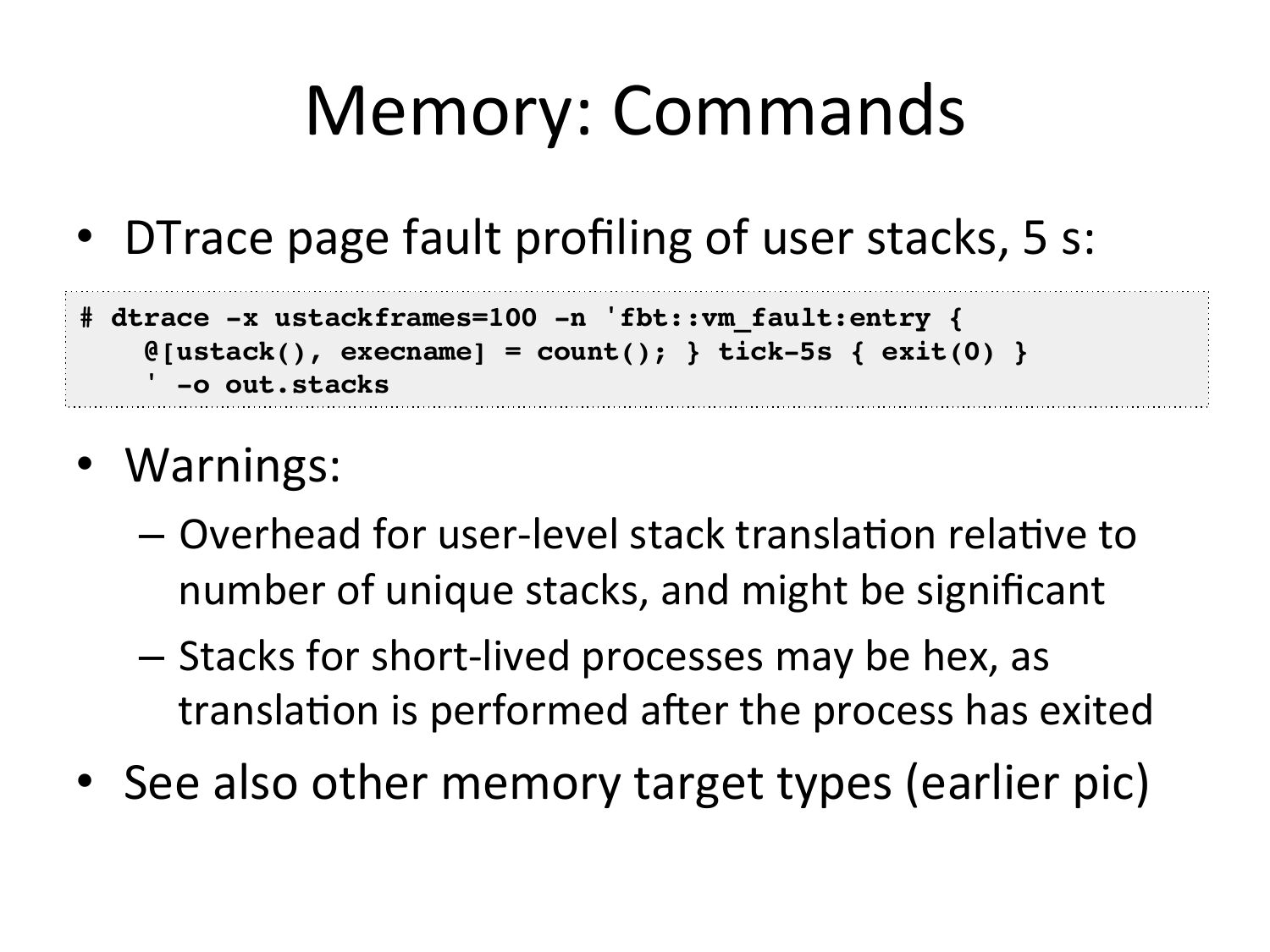

Memory:

Commands

• DTrace

page

fault

profiling

of

user

stacks,

5

s:

# dtrace -x ustackframes=100 -n 'fbt::vm_fault:entry {!

@[ustack(), execname] = count(); } tick-5s { exit(0) }!

' -o out.stacks!

• Warnings:

– Overhead

for

user-‐level

stack

transla=on

rela=ve

to

number

of

unique

stacks,

and

might

be

significant

– Stacks

for

short-‐lived

processes

may

be

hex,

as

transla=on

is

performed

afer

the

process

has

exited

• See

also

other

memory

target

types

(earlier

pic)

slide 39:

5.

Disk

I/O

slide 40:

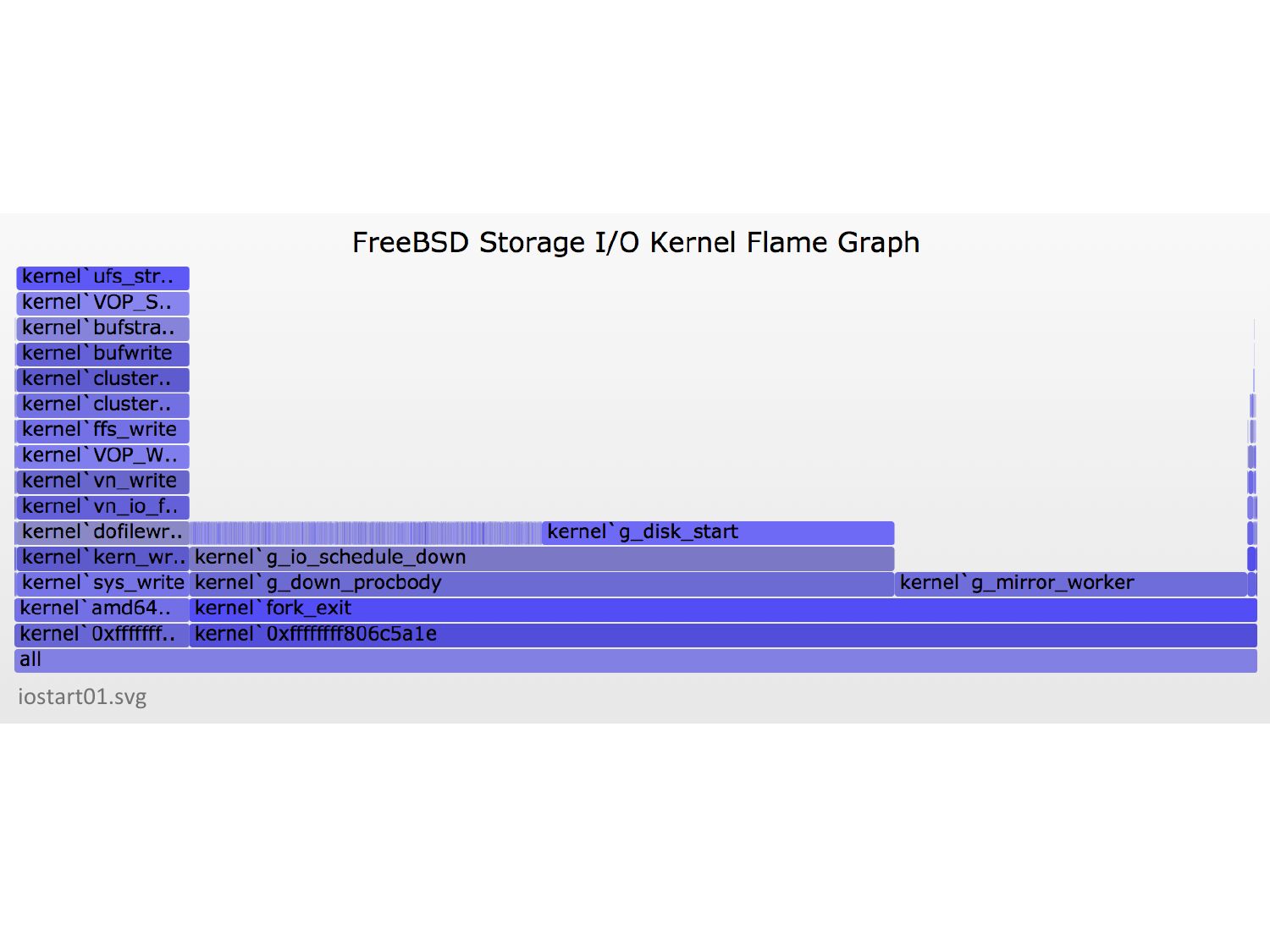

iostart01.svg

slide 41:

DEMO

slide 42:

Disk

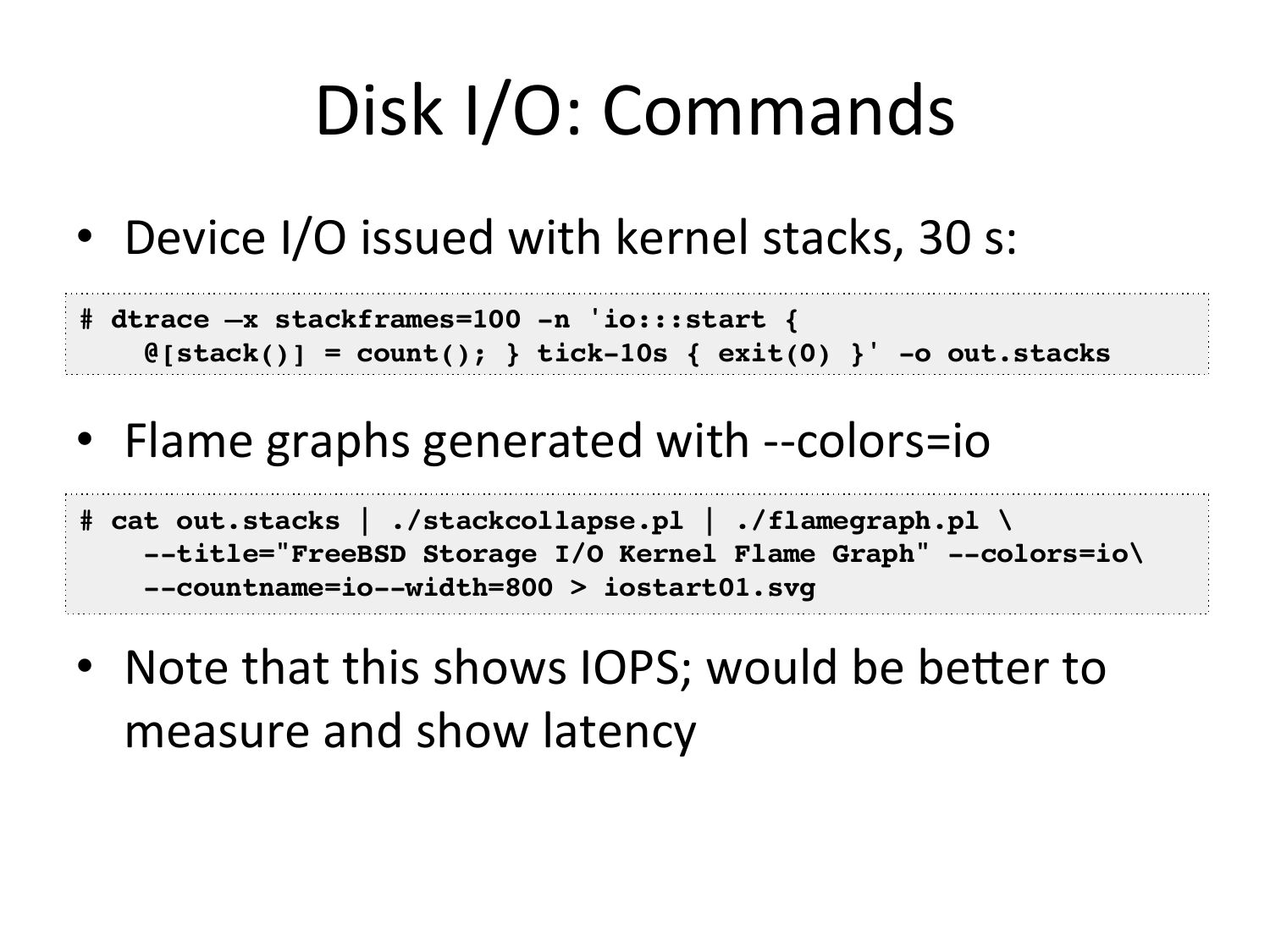

I/O:

Commands

• Device

I/O

issued

with

kernel

stacks,

30

s:

# dtrace –x stackframes=100 -n 'io:::start {!

@[stack()] = count(); } tick-10s { exit(0) }' -o out.stacks!

• Flame

graphs

generated

with

-‐-‐colors=io

# cat out.stacks | ./stackcollapse.pl | ./flamegraph.pl \!

--title="FreeBSD Storage I/O Kernel Flame Graph" --colors=io\!

--countname=io--width=800 >gt; iostart01.svg!

• Note

that

this

shows

IOPS;

would

be

beuer

to

measure

and

show

latency

slide 43:

6.

Off-‐CPU

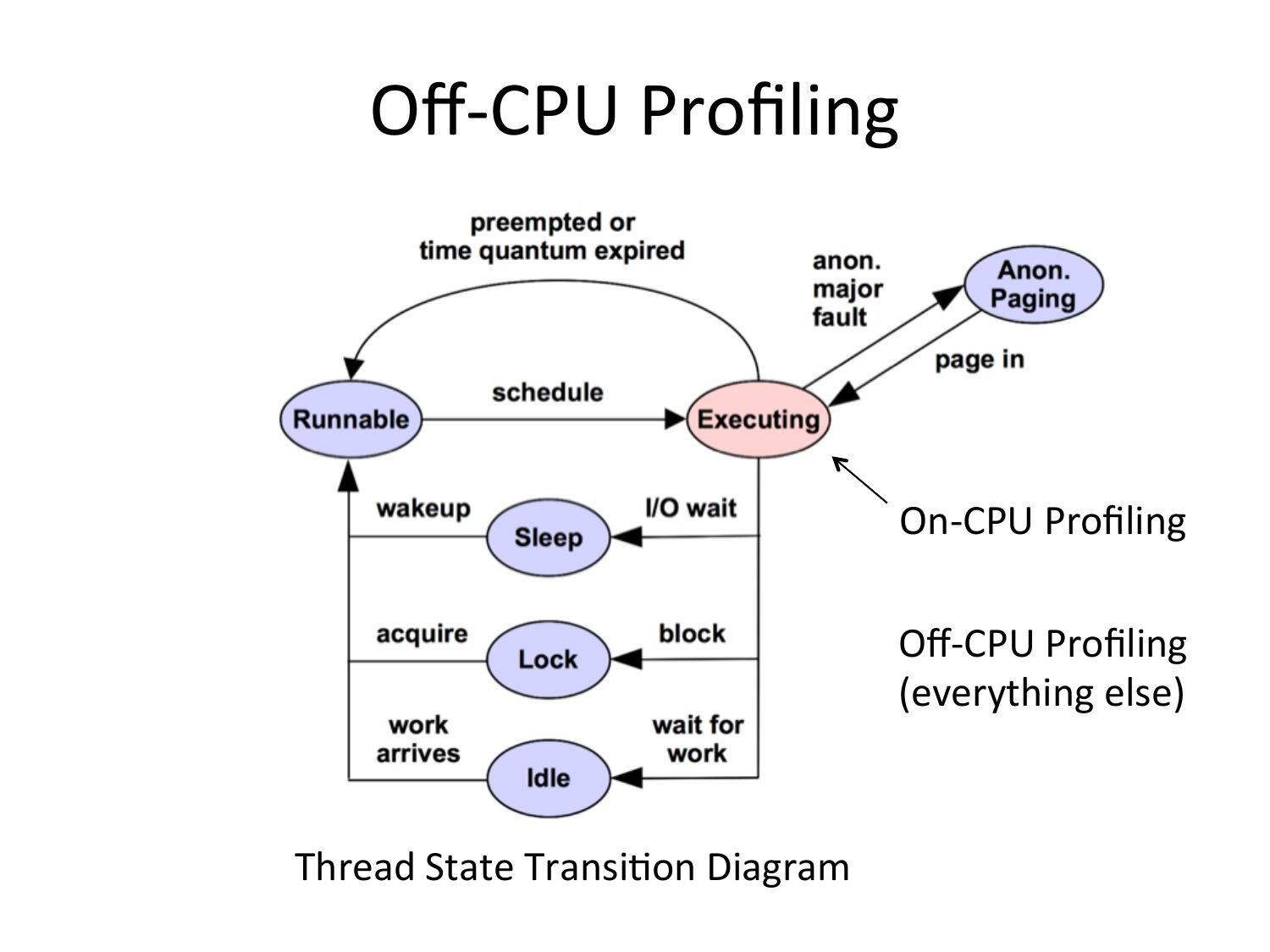

slide 44:

Off-‐CPU

Profiling

On-‐CPU

Profiling

Off-‐CPU

Profiling

(everything

else)

Thread

State

Transi=on

Diagram

slide 45:

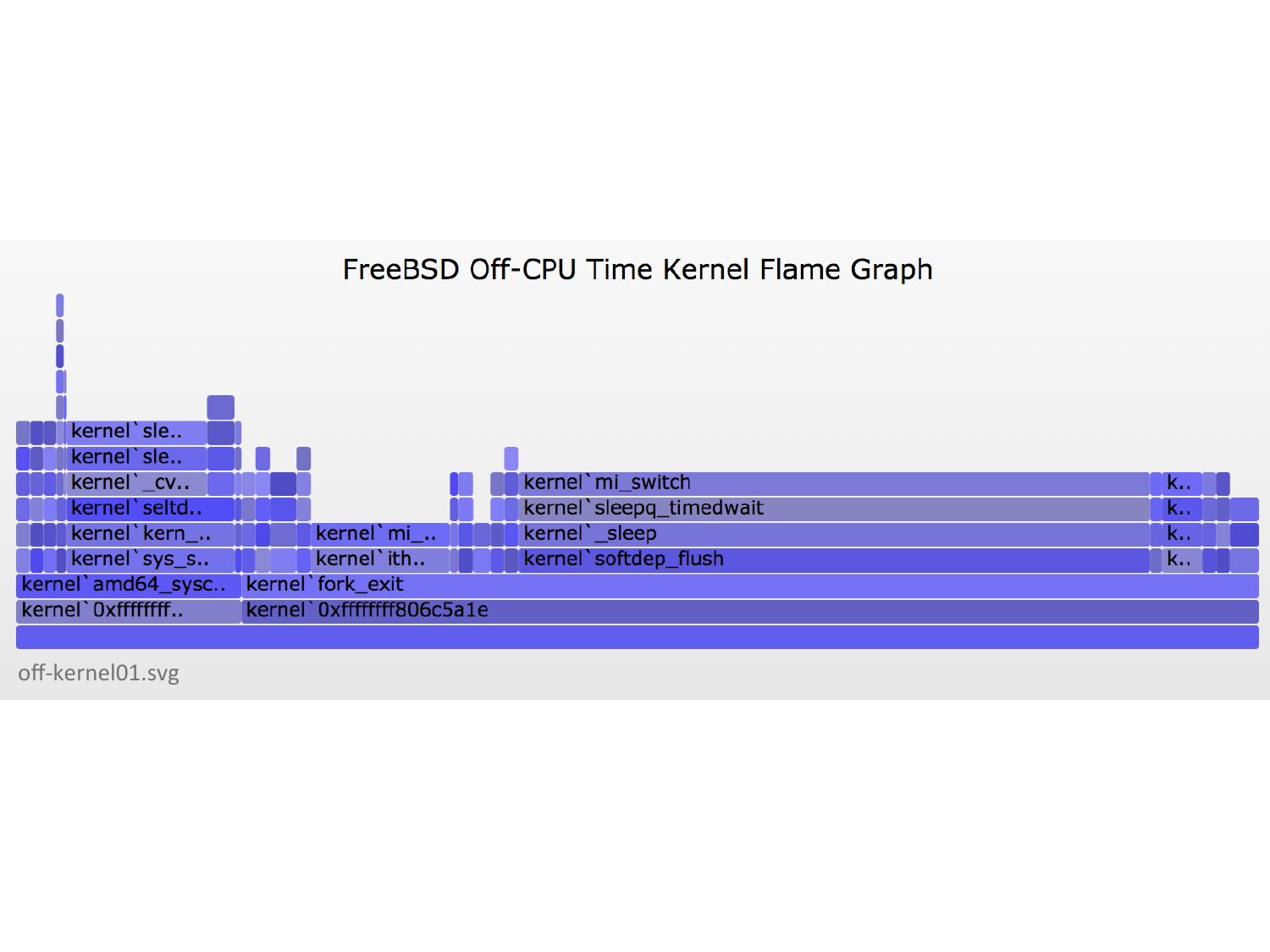

off-‐kernel01.svg

slide 46:

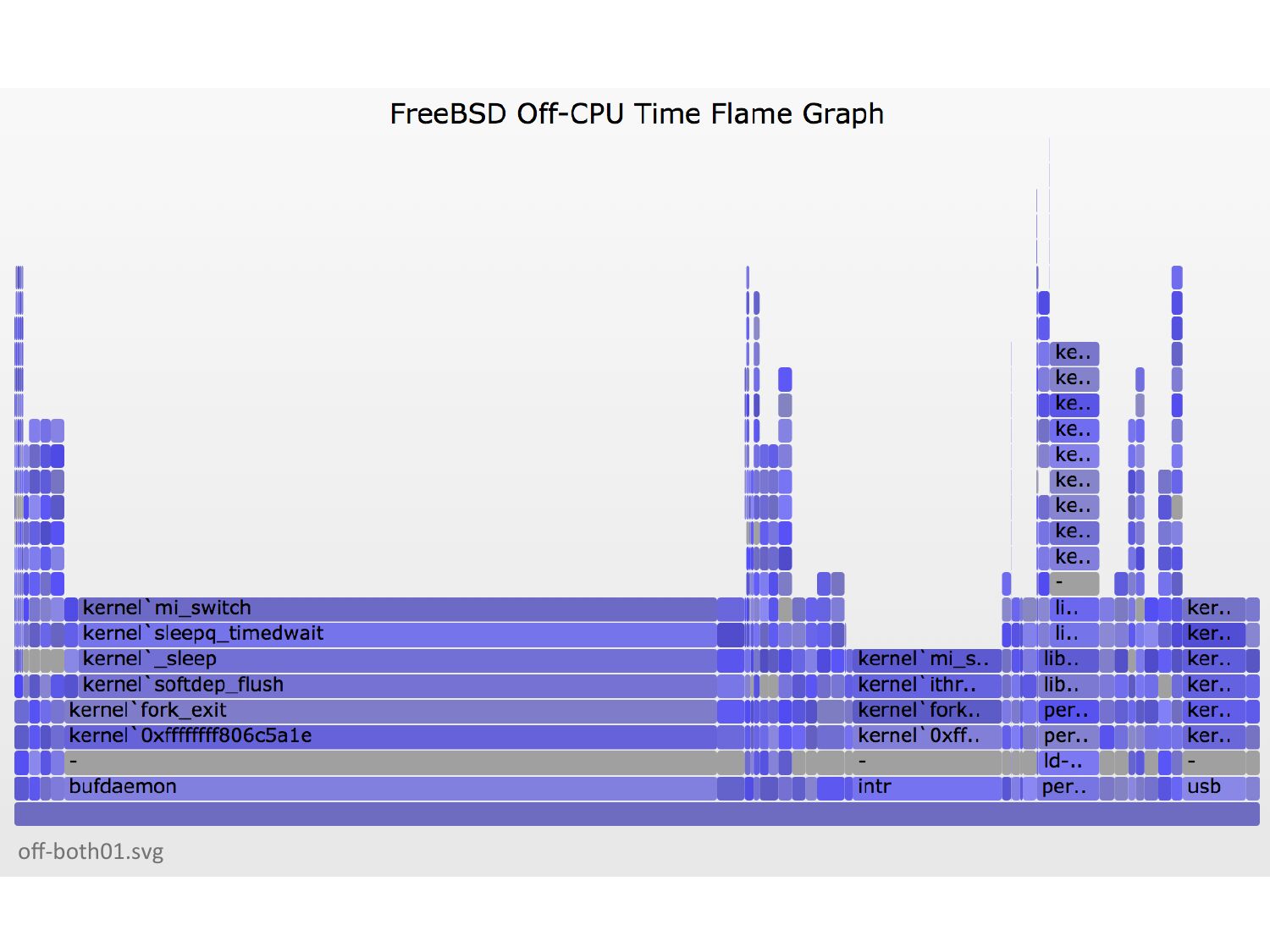

off-‐both01.svg

slide 47:

DEMO

slide 48:

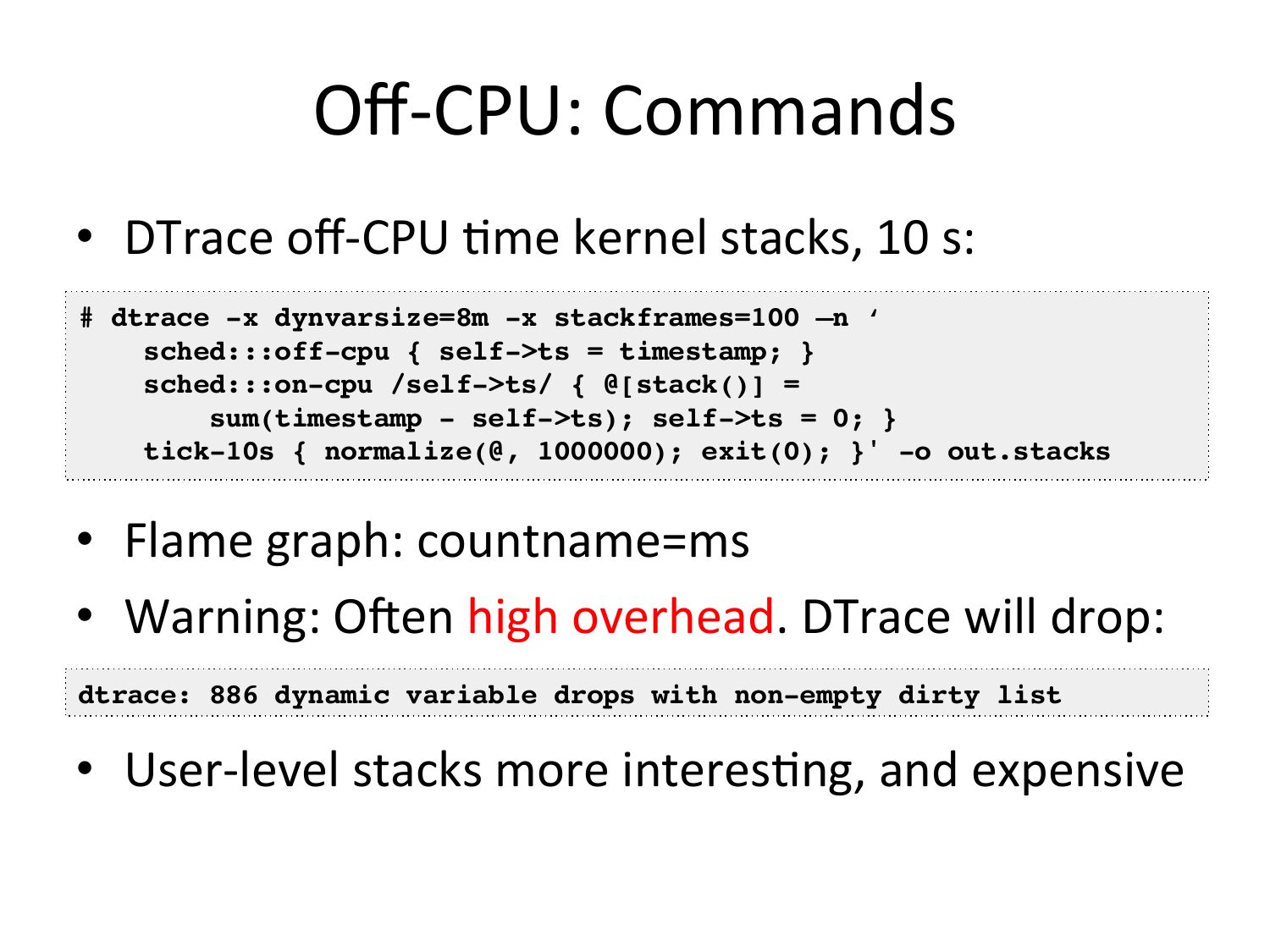

Off-‐CPU:

Commands

• DTrace

off-‐CPU

=me

kernel

stacks,

10

s:

# dtrace -x dynvarsize=8m -x stackframes=100 –n ‘!

sched:::off-cpu { self->gt;ts = timestamp; }!

sched:::on-cpu /self->gt;ts/ { @[stack()] =!

sum(timestamp - self->gt;ts); self->gt;ts = 0; }!

tick-10s { normalize(@, 1000000); exit(0); }' -o out.stacks!

• Flame

graph:

countname=ms

• Warning:

Ofen

high

overhead.

DTrace

will

drop:

dtrace: 886 dynamic variable drops with non-empty dirty list!

• User-‐level

stacks

more

interes=ng,

and

expensive

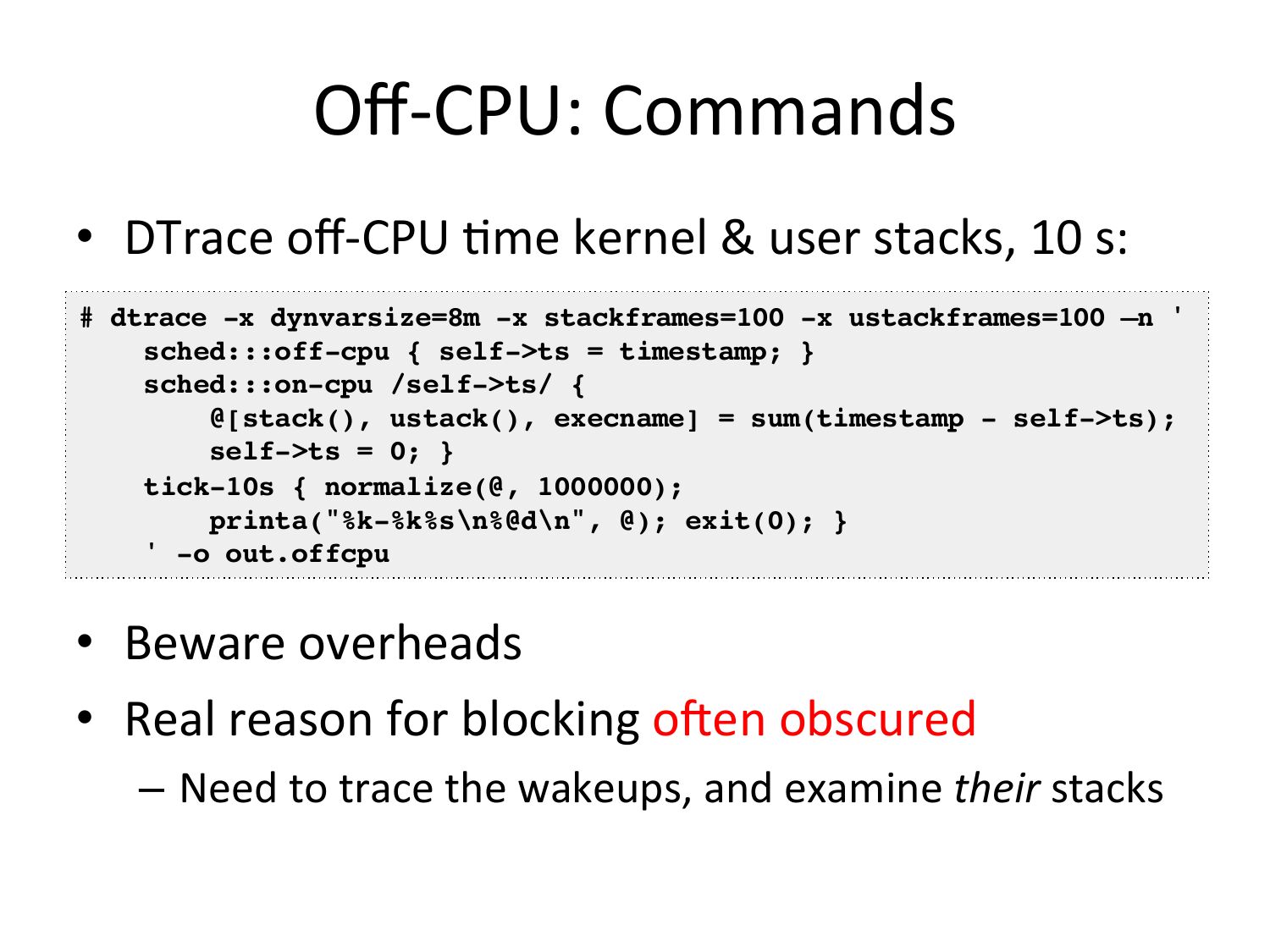

slide 49:

Off-‐CPU:

Commands

• DTrace

off-‐CPU

=me

kernel

&

user

stacks,

10

s:

# dtrace -x dynvarsize=8m -x stackframes=100 -x ustackframes=100 –n '!

sched:::off-cpu { self->gt;ts = timestamp; }

sched:::on-cpu /self->gt;ts/ {!

@[stack(), ustack(), execname] = sum(timestamp - self->gt;ts);!

self->gt;ts = 0; }!

tick-10s { normalize(@, 1000000);!

printa("%k-%k%s\n%@d\n", @); exit(0); }!

' -o out.offcpu!

• Beware

overheads

• Real

reason

for

blocking

ofen

obscured

– Need

to

trace

the

wakeups,

and

examine

their

stacks

slide 50:

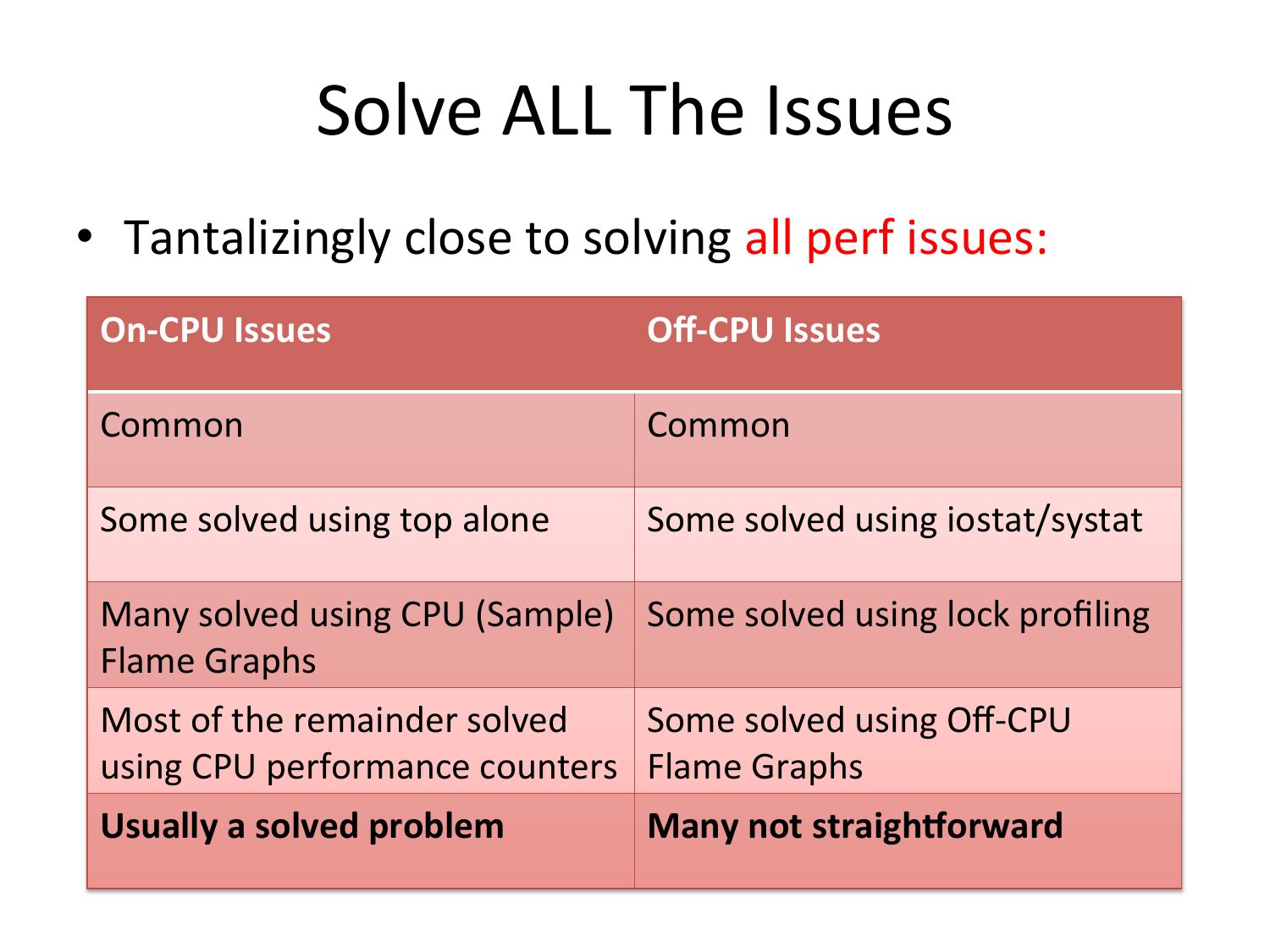

Solve

ALL

The

Issues

• Tantalizingly

close

to

solving

all

perf

issues:

On-‐CPU

Issues

Off-‐CPU

Issues

Common

Common

Some

solved

using

top

alone

Some

solved

using

iostat/systat

Many

solved

using

CPU

(Sample)

Some

solved

using

lock

profiling

Flame

Graphs

Most

of

the

remainder

solved

Some

solved

using

Off-‐CPU

using

CPU

performance

counters

Flame

Graphs

Usually

a

solved

problem

Many

not

straigh=orward

slide 51:

7.

Chain

Graphs

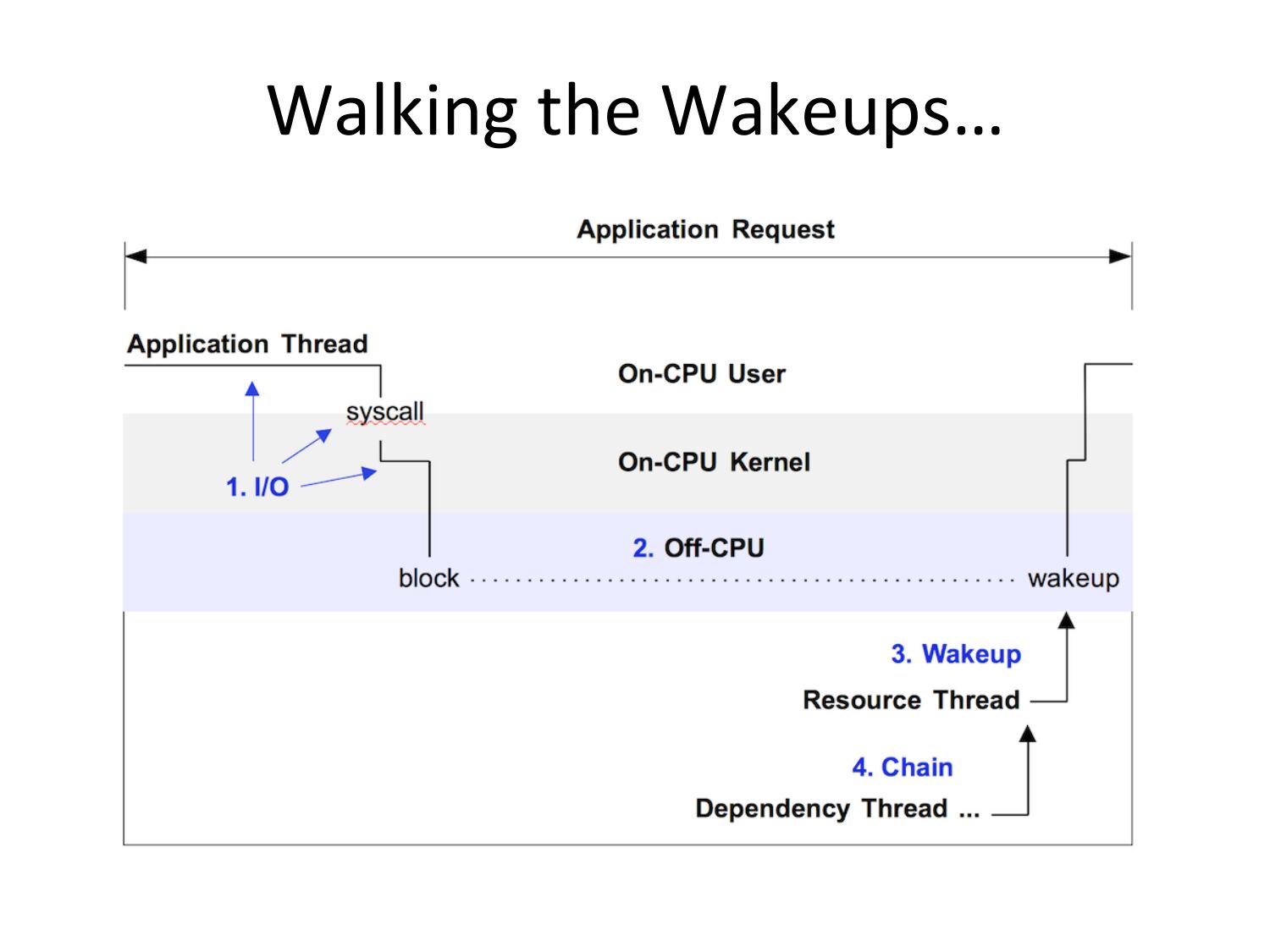

slide 52:

Walking

the

Wakeups…

slide 53:

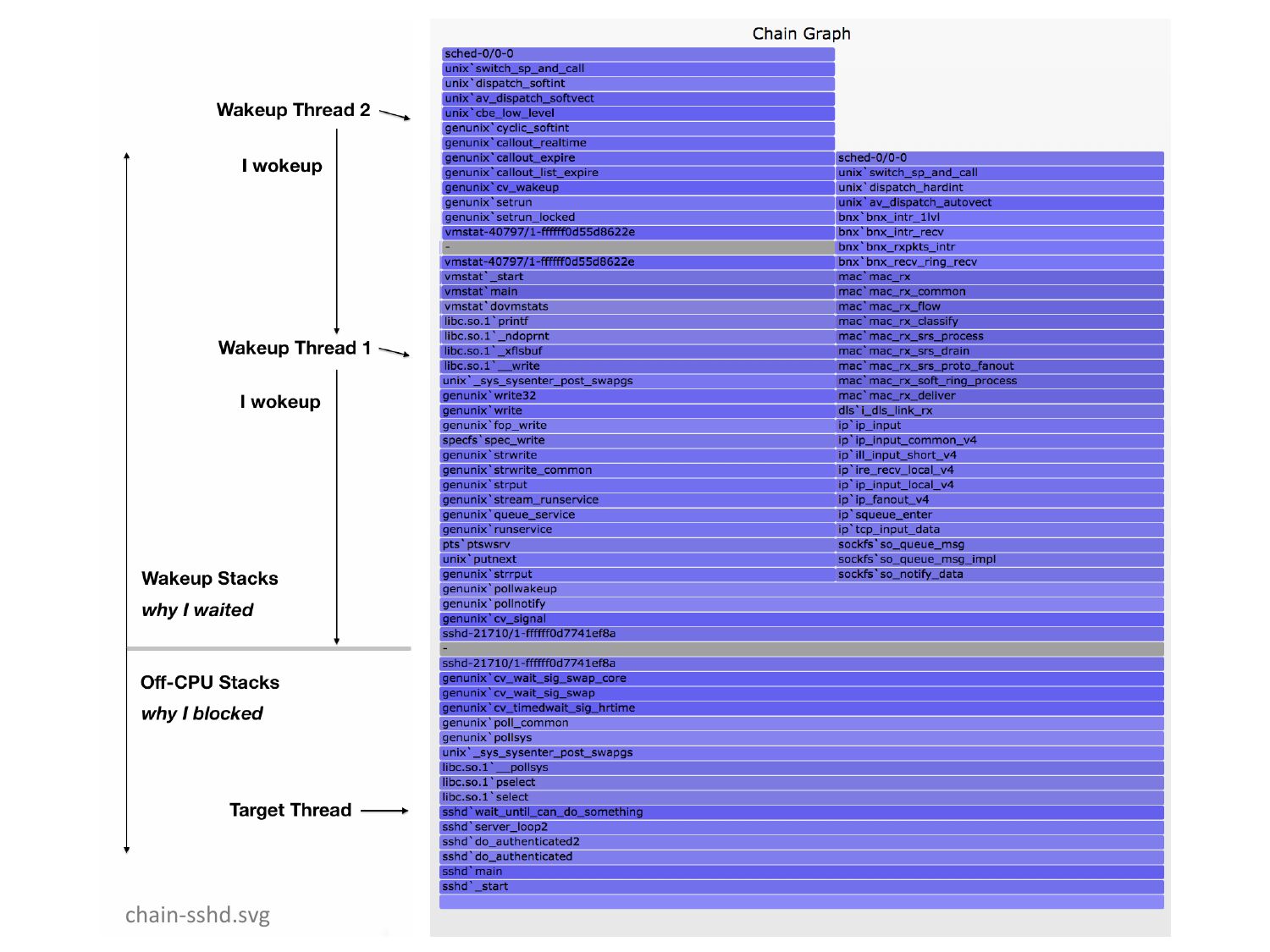

chain-‐sshd.svg

slide 54:

DEMO

slide 55:

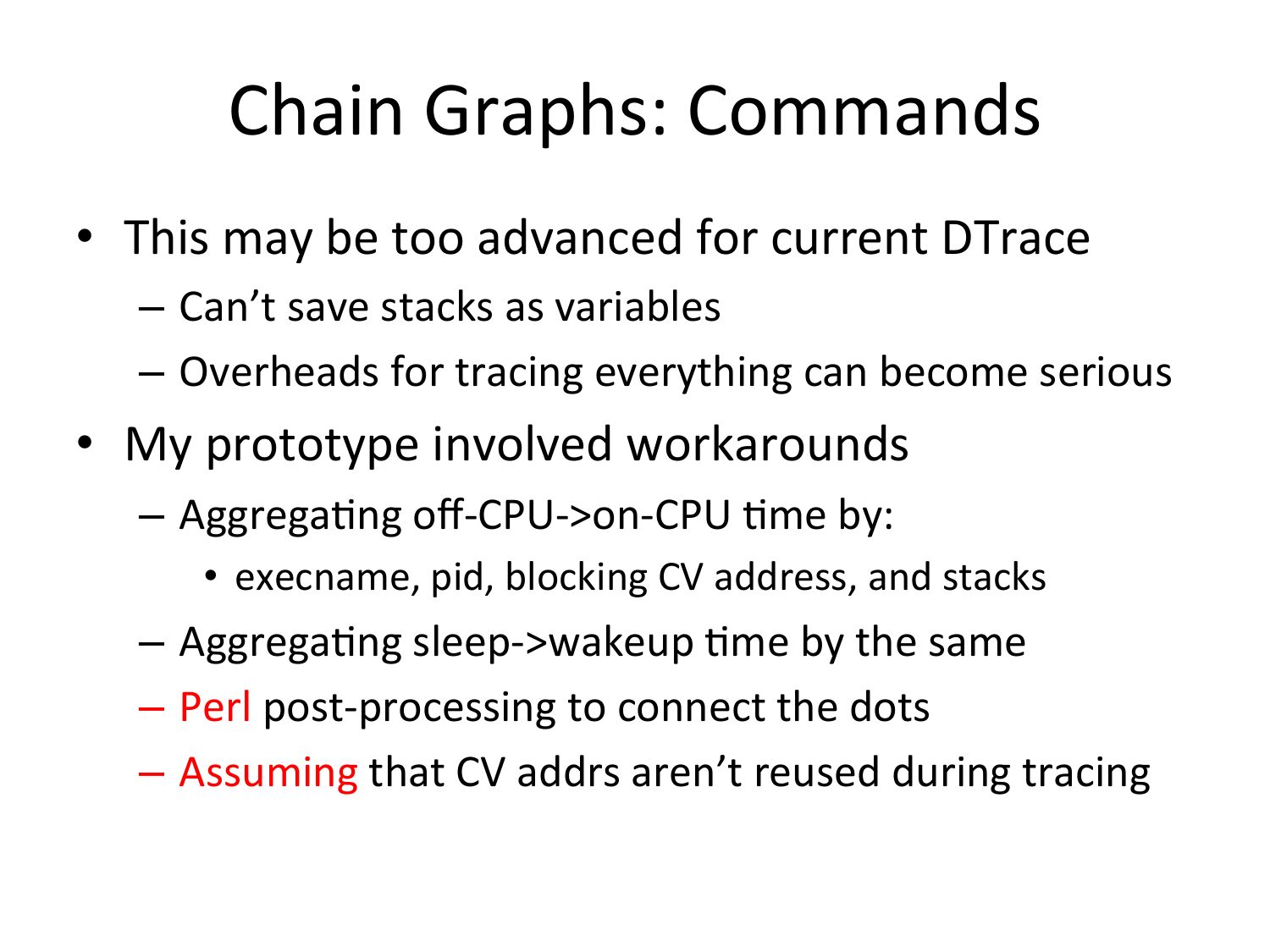

Chain

Graphs:

Commands

• This

may

be

too

advanced

for

current

DTrace

– Can’t

save

stacks

as

variables

– Overheads

for

tracing

everything

can

become

serious

• My

prototype

involved

workarounds

– Aggrega=ng

off-‐CPU-‐>gt;on-‐CPU

=me

by:

• execname,

pid,

blocking

CV

address,

and

stacks

– Aggrega=ng

sleep-‐>gt;wakeup

=me

by

the

same

– Perl

post-‐processing

to

connect

the

dots

– Assuming

that

CV

addrs

aren’t

reused

during

tracing

slide 56:

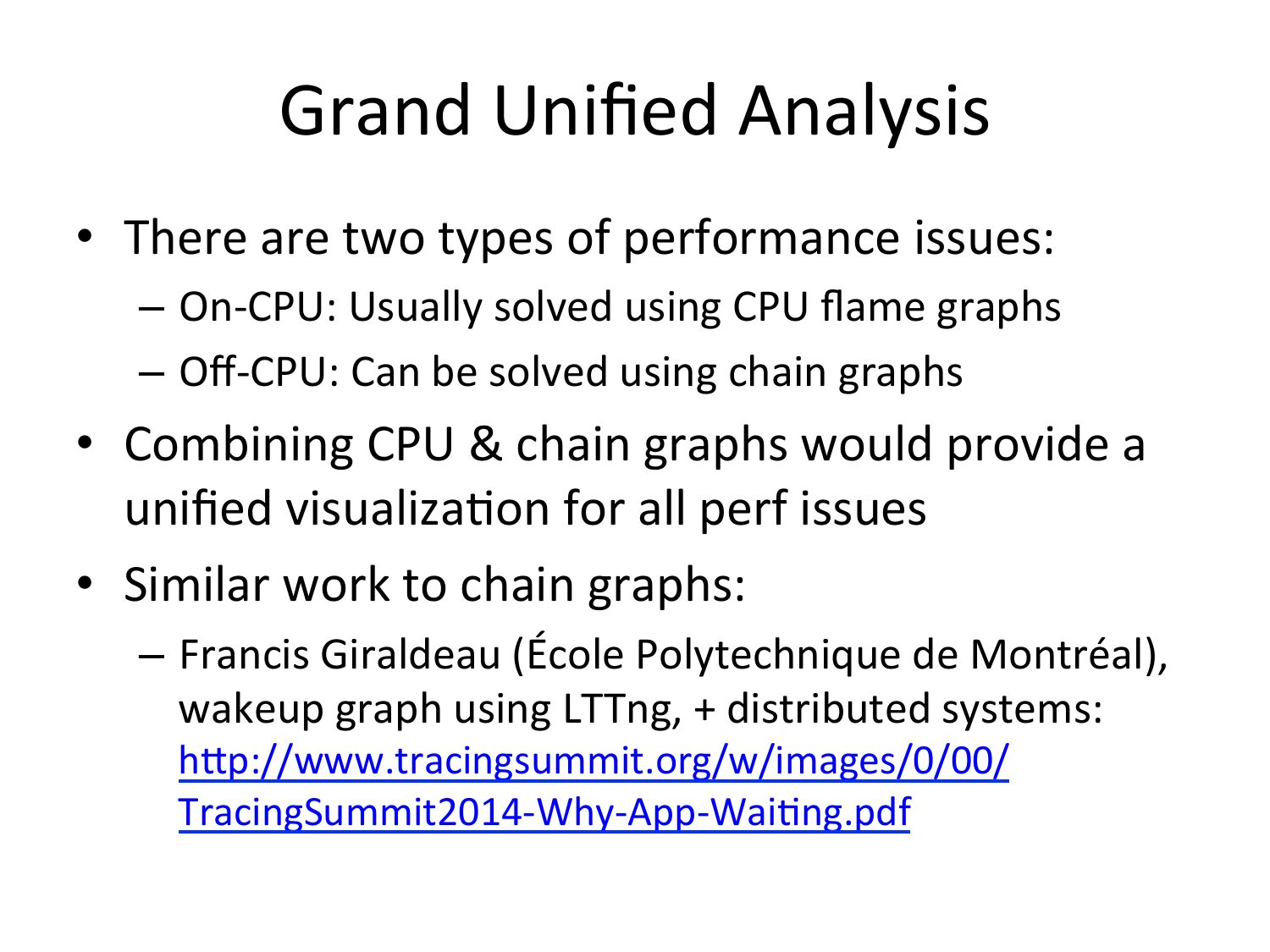

Grand

Unified

Analysis

• There

are

two

types

of

performance

issues:

– On-‐CPU:

Usually

solved

using

CPU

flame

graphs

– Off-‐CPU:

Can

be

solved

using

chain

graphs

• Combining

CPU

&

chain

graphs

would

provide

a

unified

visualiza=on

for

all

perf

issues

• Similar

work

to

chain

graphs:

– Francis

Giraldeau

(École

Polytechnique

de

Montréal),

wakeup

graph

using

LTTng,

+

distributed

systems:

hup://www.tracingsummit.org/w/images/0/00/

TracingSummit2014-‐Why-‐App-‐Wai=ng.pdf

slide 57:

Other

Topics

slide 58:

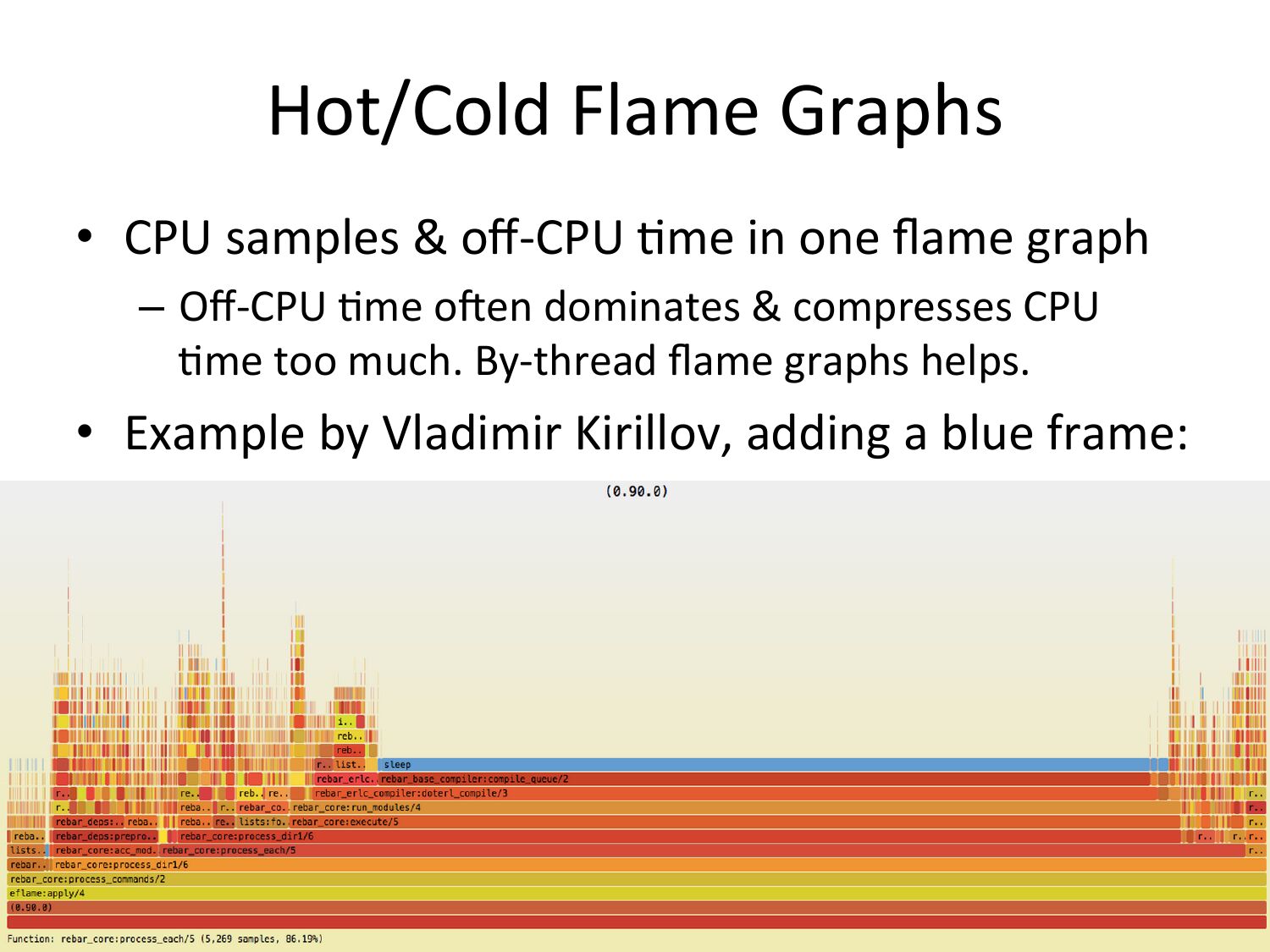

Hot/Cold

Flame

Graphs

• CPU

samples

&

off-‐CPU

=me

in

one

flame

graph

– Off-‐CPU

=me

ofen

dominates

&

compresses

CPU

=me

too

much.

By-‐thread

flame

graphs

helps.

• Example

by

Vladimir

Kirillov,

adding

a

blue

frame:

slide 59:

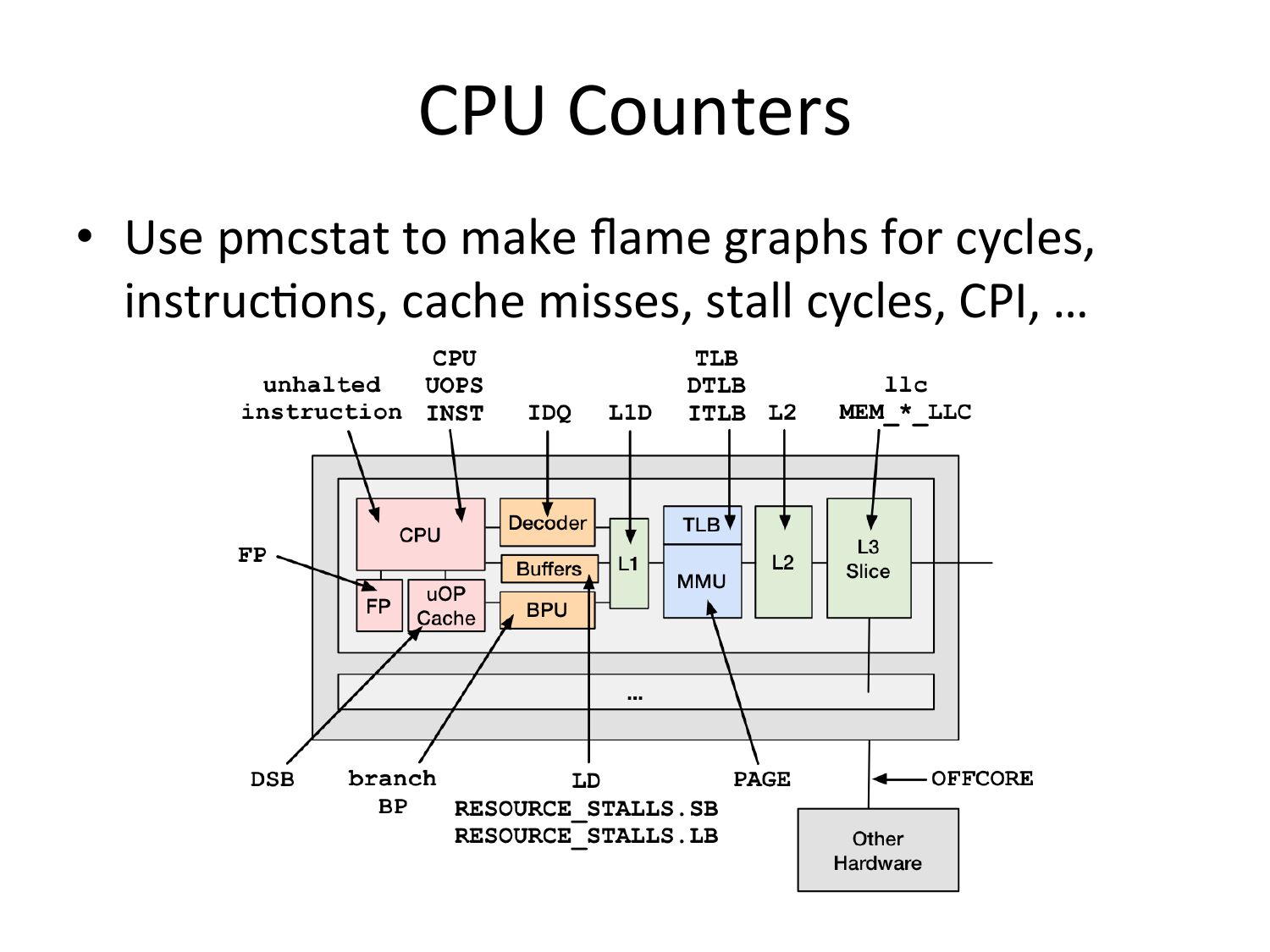

CPU

Counters

• Use

pmcstat

to

make

flame

graphs

for

cycles,

instruc=ons,

cache

misses,

stall

cycles,

CPI,

…

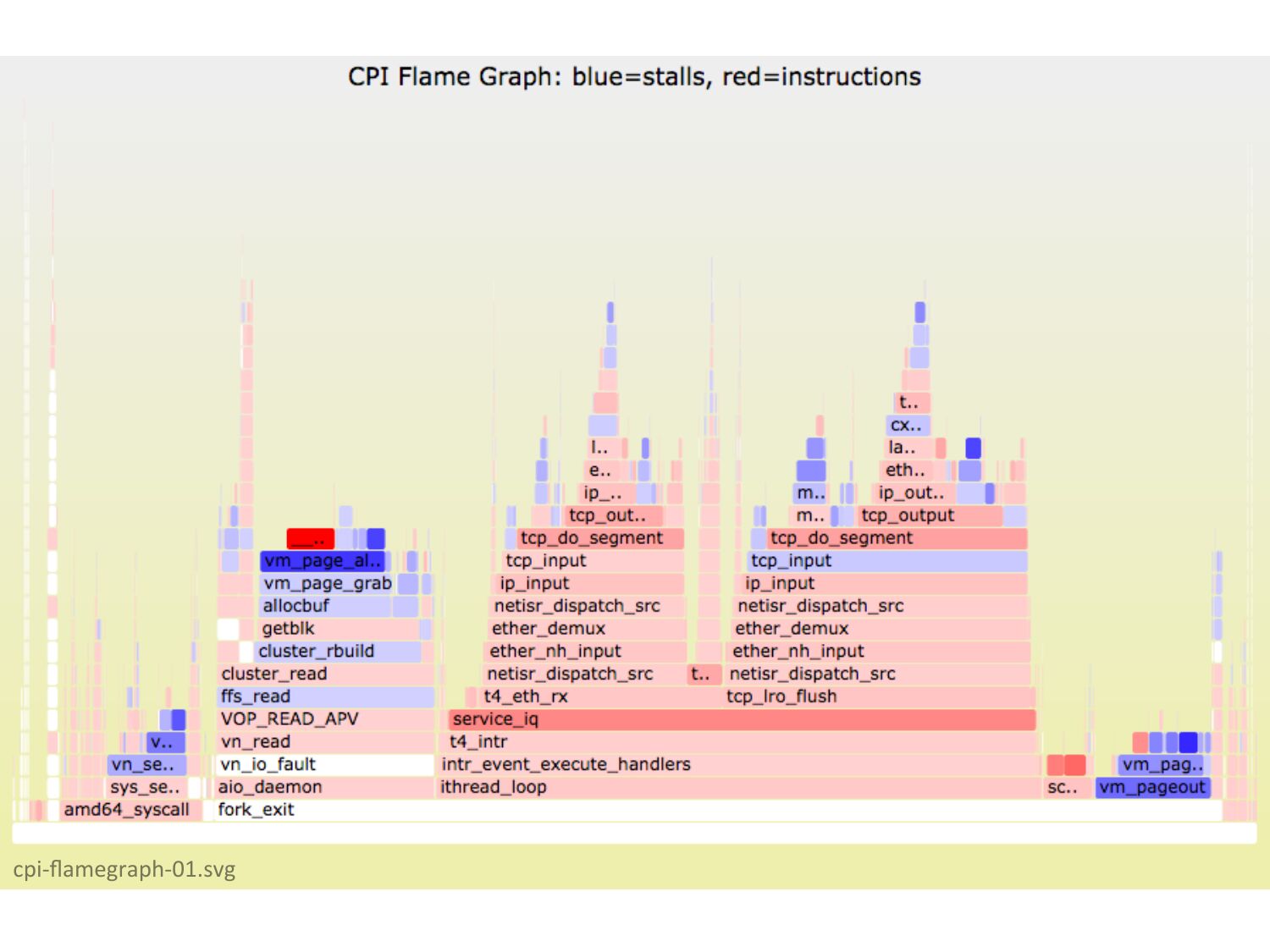

slide 60:

cpi-‐flamegraph-‐01.svg

slide 61:

Differen=al

Flame

Graphs

• Just

added

(used

to

make

the

CPI

flame

graph)

• Useful

for

non-‐regression

tes=ng:

hue

shows

difference

between

profile1

and

profile2

slide 62:

Flame

Charts

• Similar

to

flame

graphs,

but

different

x-‐axis

• x-‐axis:

passage

of

=me

• Created

by

Google

for

Chrome/v8,

inspired

by

flame

graphs

• Needs

=mestamped

stacks

– Flame

graphs

just

need

stacks

&

counts,

which

is

usually

much

less

data

slide 63:

Other

Implementa=ons/Uses

• See

hup://www.brendangregg.com/flamegraphs.html#Updates

• Some

use

applica=on

profile

sources,

which

should

work

on

FreeBSD

• Thanks

everyone

who

has

contributed!

slide 64:

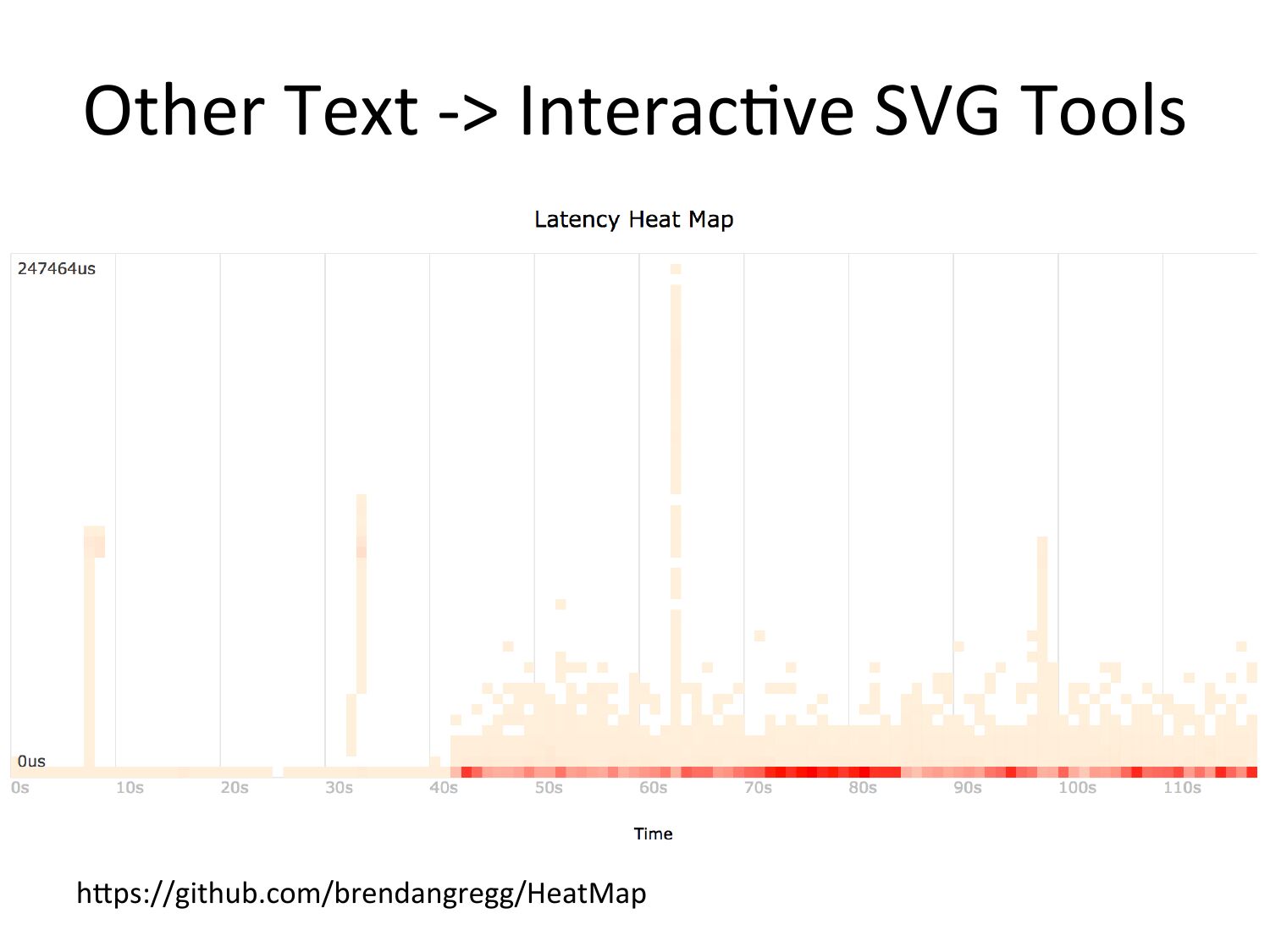

Other

Text

-‐>gt;

Interac=ve

SVG

Tools

• Heatmaps:

hups://github.com/brendangregg/HeatMap

slide 65:

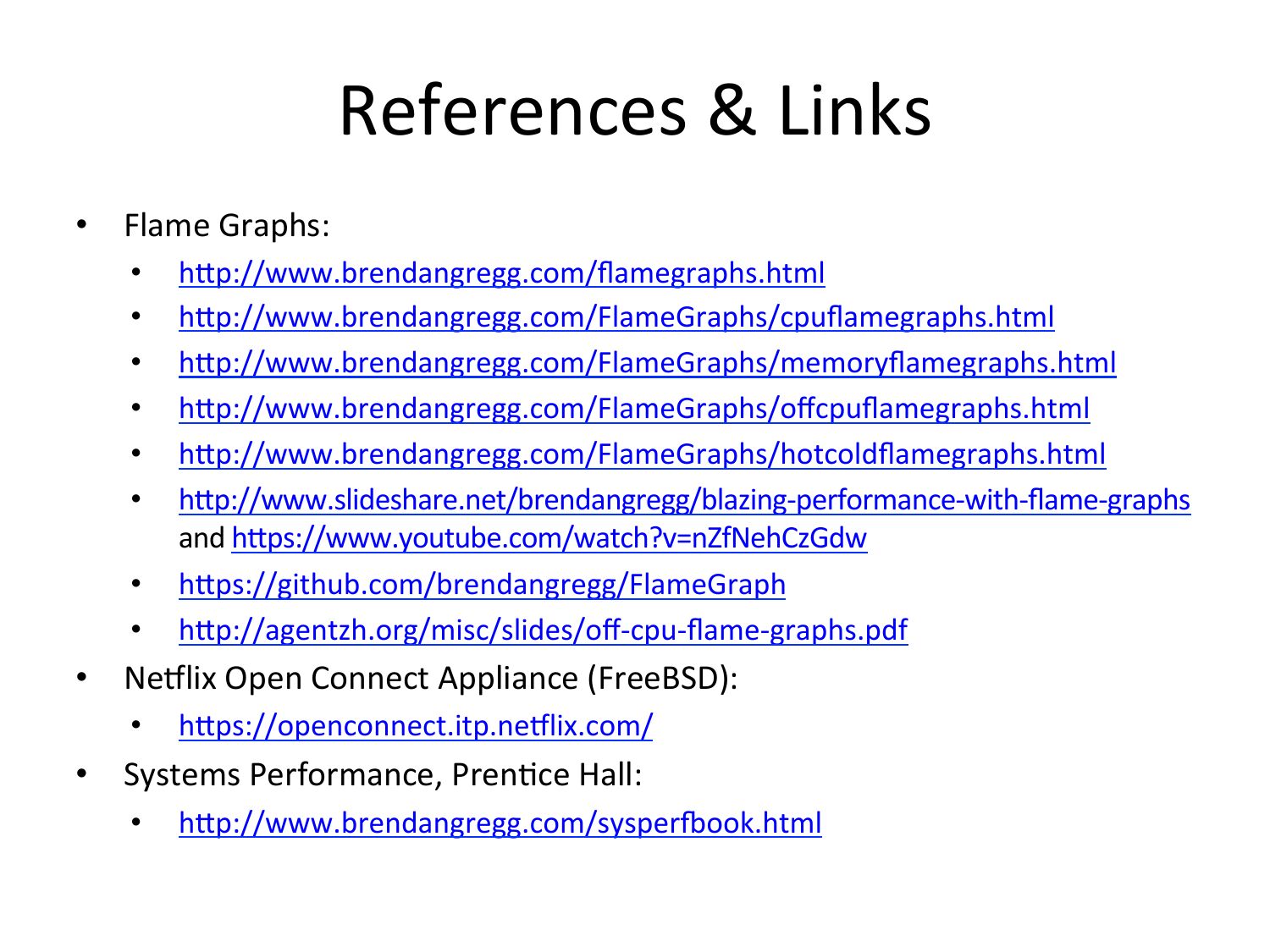

References

&

Links

Flame

Graphs:

Ne5lix

Open

Connect

Appliance

(FreeBSD):

hup://www.brendangregg.com/flamegraphs.html

hup://www.brendangregg.com/FlameGraphs/cpuflamegraphs.html

hup://www.brendangregg.com/FlameGraphs/memoryflamegraphs.html

hup://www.brendangregg.com/FlameGraphs/offcpuflamegraphs.html

hup://www.brendangregg.com/FlameGraphs/hotcoldflamegraphs.html

hup://www.slideshare.net/brendangregg/blazing-‐performance-‐with-‐flame-‐graphs

and

hups://www.youtube.com/watch?v=nZfNehCzGdw

hups://github.com/brendangregg/FlameGraph

hup://agentzh.org/misc/slides/off-‐cpu-‐flame-‐graphs.pdf

hups://openconnect.itp.ne5lix.com/

Systems

Performance,

Pren=ce

Hall:

hup://www.brendangregg.com/sysper€ook.html

slide 66:

Thanks

• Ques=ons?

• hup://slideshare.net/brendangregg

• hup://www.brendangregg.com

• bgregg@ne5lix.com

• @brendangregg